Back in 2018 I posted my annual Christmas List of STEM Toys and the Piper Computer Kit 2 was on the list. My kids love this little wooden "laptop" comprised of a Raspberry Pi and an LCD screen. You spend time going through curated episodes of custom content and build and wire the computer LIVE while it's on!

The Piper folks saw my post and asked me to take a look at the BETA of their Piper Command Center, so my sons and I jumped at the chance. They are actively looking for feedback. It's a chance to build our own game controller!

The Piper Command Center BETA already has a ton of online content and things to try. Their "firmware" is an Arduino sketch and it's all up on GitHub. You'll want to get the Arduino IDE from the Windows Store.

Today the Command Center can present itself to your computer as either a Keyboard or a Mouse.

- In Mouse Mode (default), the joystick controls cursor movement and the left and right buttons mimic left and right mouse clicks.

- In Keyboard Mode, the joystick mimics the arrow keys on a keyboard, and the buttons mimic Space Bar (Up), Z (Left), X (Down), and C (Right) keys on a keyboard.
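To make the two modes a bit more concrete, here is a minimal sketch of the mapping described above, written as plain C++ so it can be compiled off-device. This is not Piper's actual firmware: every name here (`Button`, `keyForButton`, `axisToDelta`) is hypothetical, and the 0 to 1023 joystick range, the center value of 512, the dead zone, and the step size are all assumptions chosen for illustration.

```cpp
#include <cstdlib>

// Hypothetical names throughout; this is an illustration, not Piper's firmware.
enum class Button { Up, Left, Down, Right };

// Keyboard Mode mapping as described in the post:
// Up -> Space Bar, Left -> Z, Down -> X, Right -> C.
char keyForButton(Button b) {
    switch (b) {
        case Button::Up:    return ' ';
        case Button::Left:  return 'z';
        case Button::Down:  return 'x';
        case Button::Right: return 'c';
    }
    return '\0';  // unreachable for valid enum values
}

// Mouse Mode: turn one raw joystick axis reading into a cursor step.
// Assumes a 10-bit reading (0..1023) centered near 512; readings inside
// the dead zone are treated as "stick at rest" so the cursor does not drift.
int axisToDelta(int raw, int center = 512, int deadZone = 40, int maxStep = 8) {
    int offset = raw - center;
    if (std::abs(offset) < deadZone) {
        return 0;
    }
    // Scale the offset to roughly [-maxStep, maxStep] pixels per update.
    return offset * maxStep / center;
}
```

On a real board, values like these would presumably be handed to something like the Arduino `Keyboard` and `Mouse` libraries (for example `Keyboard.press(...)` and `Mouse.move(...)`). Keeping the mapping in small pure functions like this also makes it easy to experiment with remapping buttons before flashing anything.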

Once it's built, you can use the controller to play games in your browser or, soon, with new content on the Piper itself, which usually runs Minecraft. However, you DO NOT need the Piper to get the Piper Command Center. They are separate but complementary devices.

Assemble a real working game controller, understand the basics of an Arduino, and discover physical computing by configuring a joystick, buttons, and more. Ideal for ages 13+.

My son is looking at how he can modify the "firmware" on the Command Center to allow him to play emulators in the browser.

The Piper Command Center comes unassembled, of course, and you get to put it together with a cool blueprint instruction sheet. We had some fun with the wiring and were off by one a few times, but they've got a troubleshooting video that helped us through it.

It's a nice little bit of kit and I love that it's made of wood. I'd like to see one with a second joystick that could literally emulate an XInput control pad, although that might be more complex than just emulating a mouse or keyboard.

Go check it out. We're happy with it and we're looking forward to whatever direction it goes. The original Piper has updated itself many times in the few years we've had it, and we upgraded it to a 16 GB SD card to support the latest content and OS update.

Piper Command Center is in BETA and will be updated and actively developed as they explore this space and what they can do with the device. As of the time of this writing there were five sketches for this controller.

© 2018 Scott Hanselman. All rights reserved.

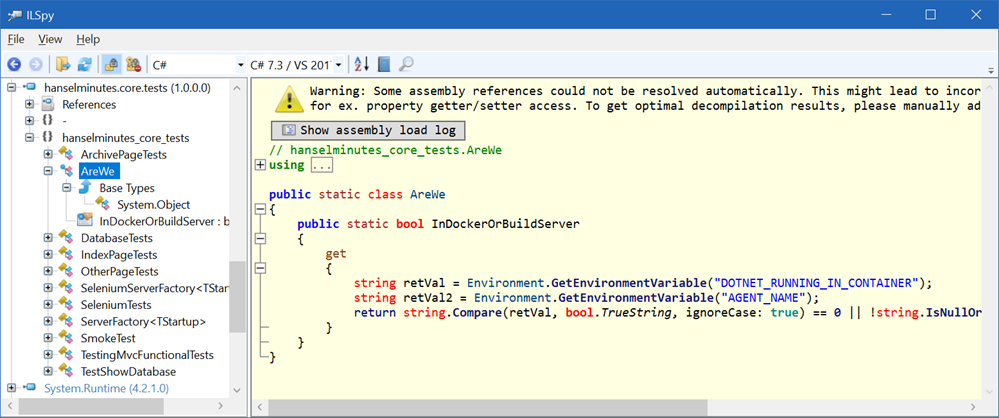

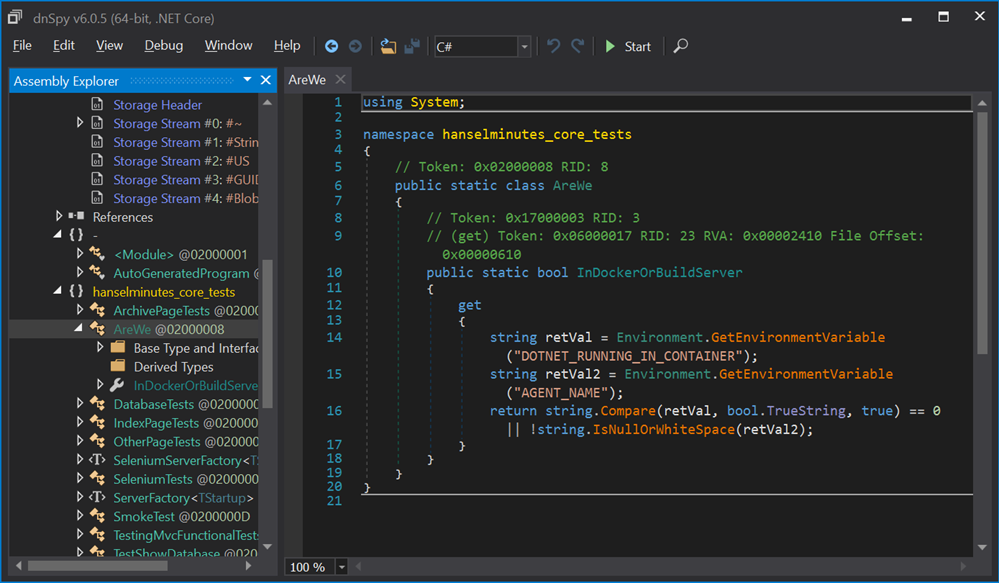

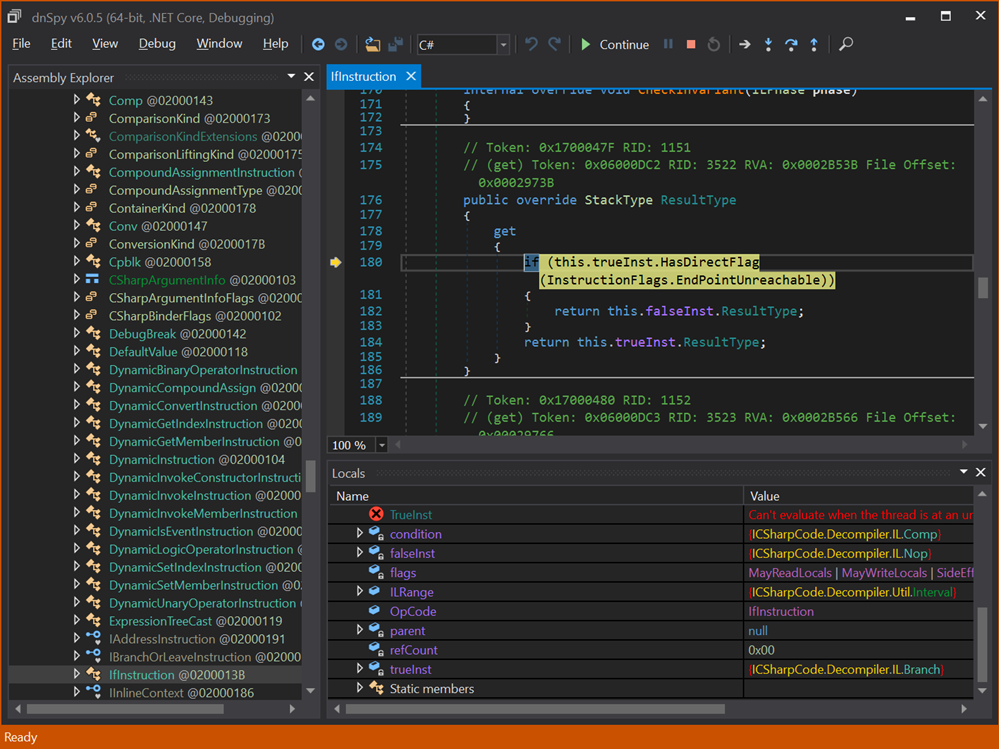

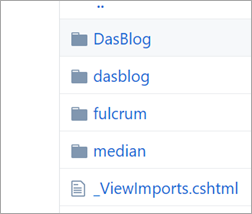

I recently needed to refactor

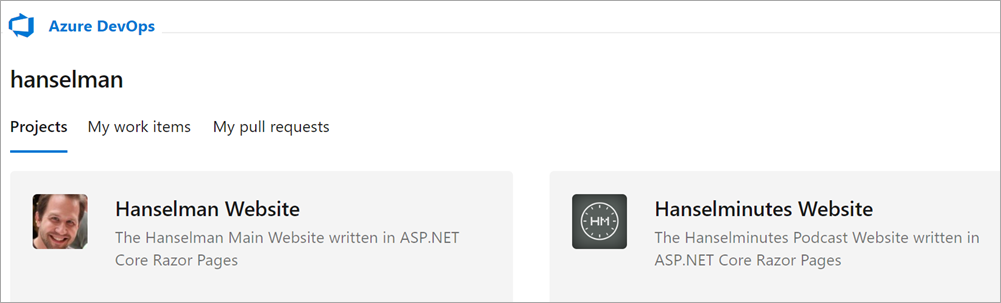

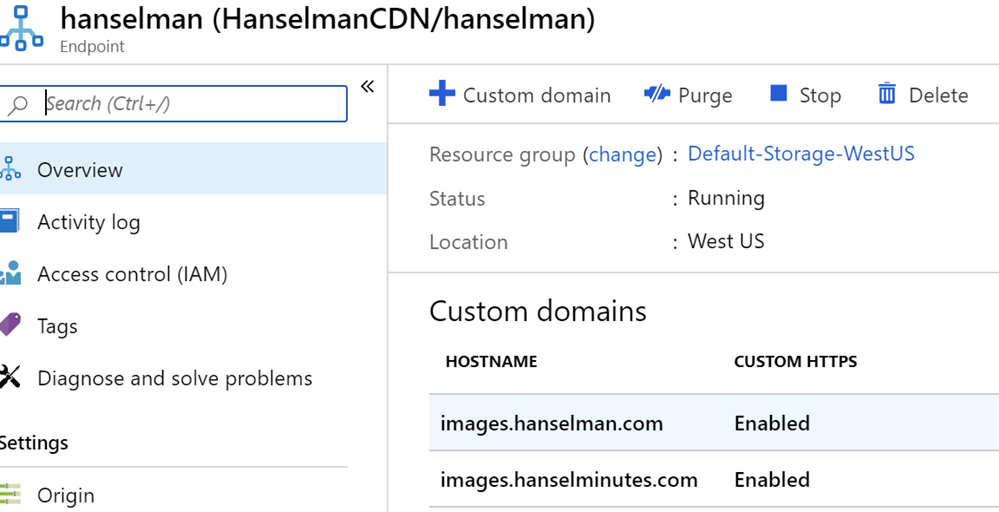

I recently needed to refactor  I don’t speak in hyperbole very often, and I want to make sure that you all understand what a big deal this is for the

I don’t speak in hyperbole very often, and I want to make sure that you all understand what a big deal this is for the

Just hours after I got off stage speaking on this very topic at

Just hours after I got off stage speaking on this very topic at  It absolutely cannot be overstated how many people keep this community alive, from early python libraries that talked to insulin pumps, to man in the middle attacks to gain access to our own data, to custom hardware boards created to bridge the new and the old.

It absolutely cannot be overstated how many people keep this community alive, from early python libraries that talked to insulin pumps, to man in the middle attacks to gain access to our own data, to custom hardware boards created to bridge the new and the old.

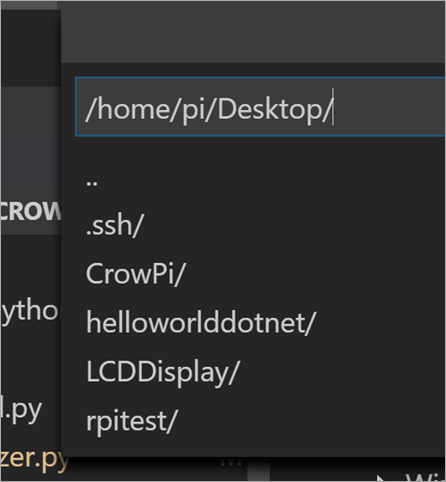

I'm

I'm

m a huge fan of

m a huge fan of