ASP.NET Core 2.2 is out, and upgrading my podcast site was very easy. Once I had it updated, I wanted to take advantage of some of the new features.

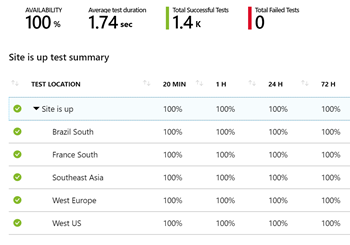

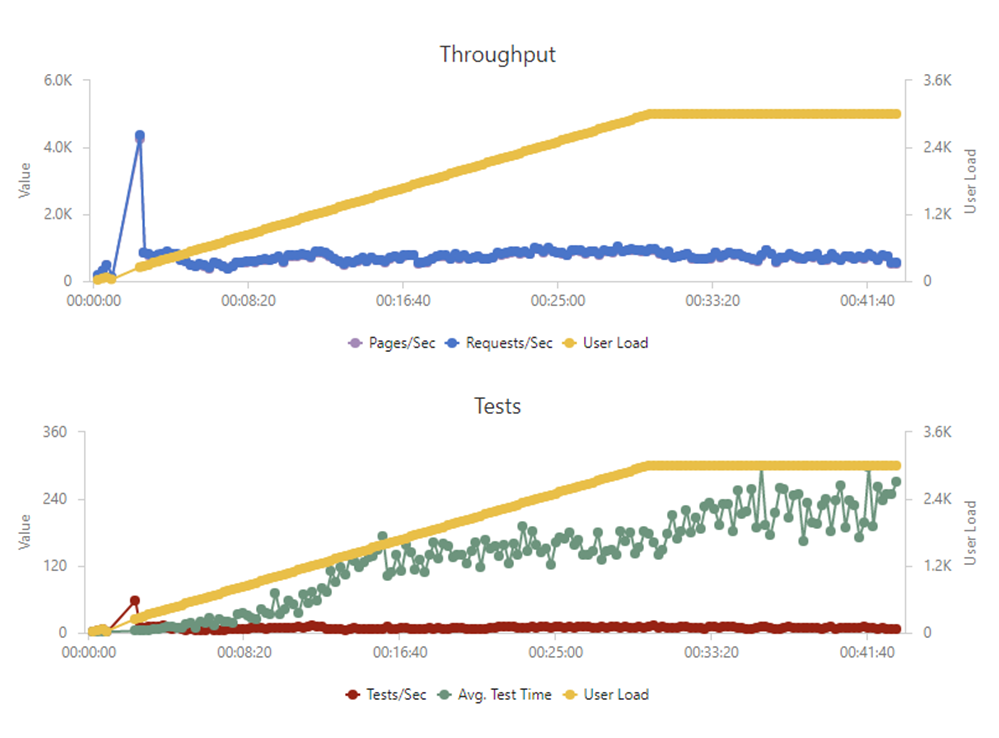

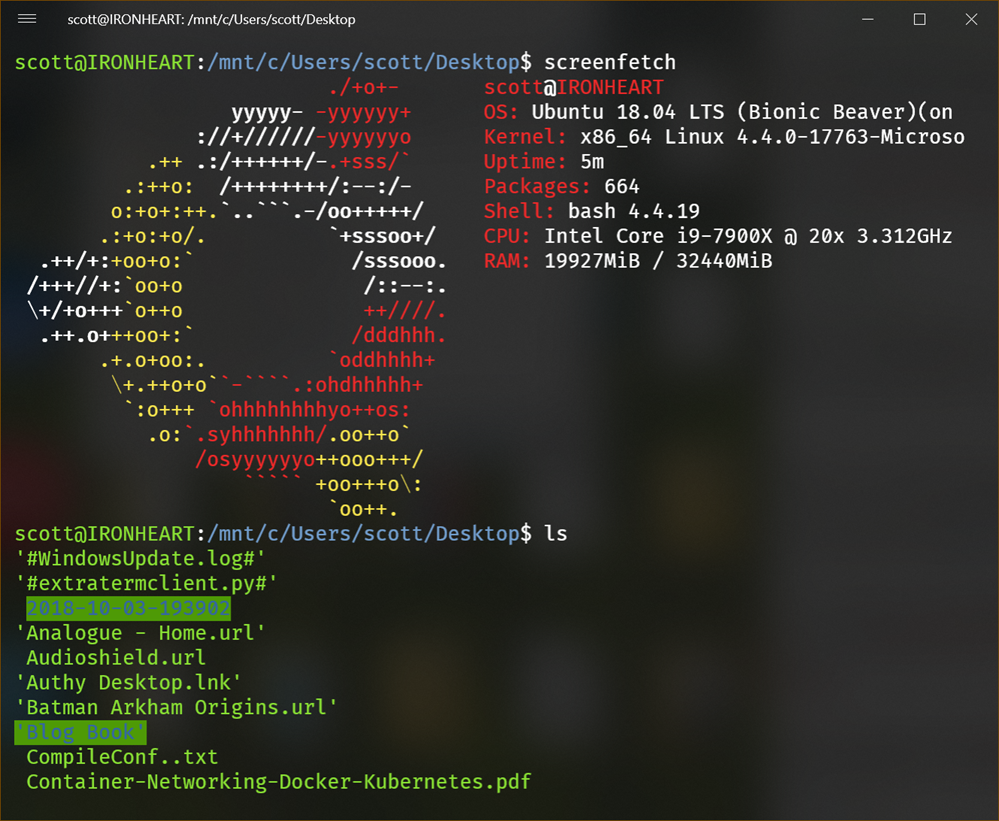

For example, I have used a number of "health check" services like elmah.io, pingdom.com, or Azure's Availability Tests. I have tests that ping my website from all over the world and alert me if the site is down or unavailable.

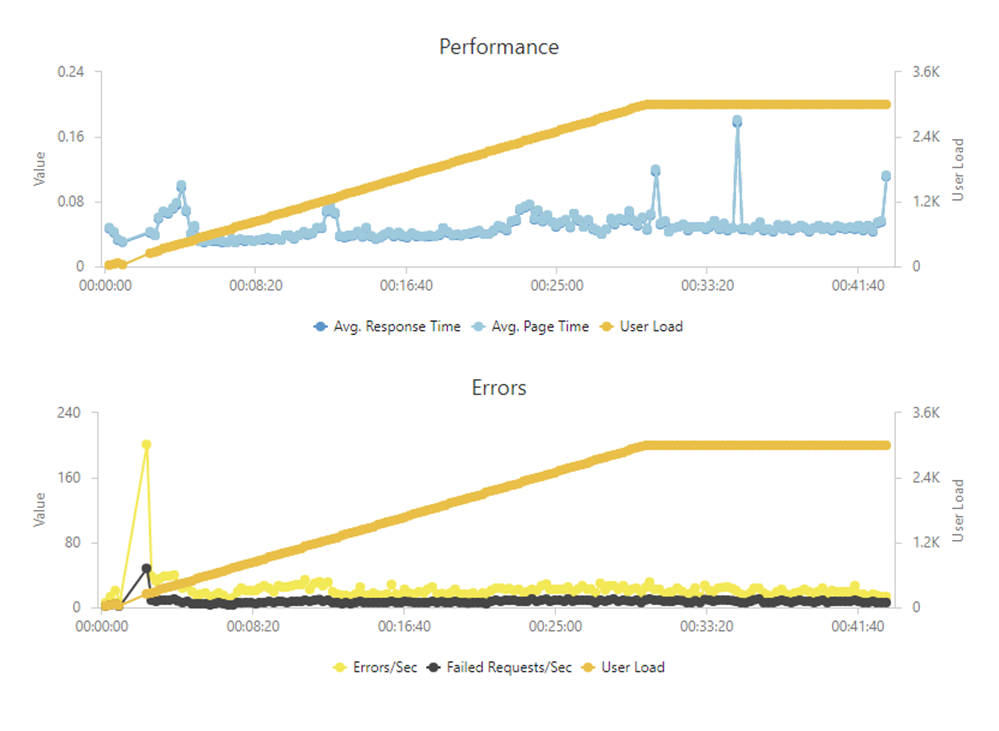

I've wanted to make my Health Endpoint Monitoring more formal. You likely have a service that does an occasional GET request to a page and looks at the HTML, or maybe just looks for an HTTP 200 response. For the longest time, most site availability tests were just basic pings. Recently, folks have been formalizing their health checks.

You can make these tests more robust by actually having the health check endpoint check deeper and then return something meaningful. That could be as simple as "Healthy" or "Unhealthy" or it could be a whole JSON payload that tells you what's working and what's not. It's up to you!

Is your database up? Maybe it's up but in read-only mode? Are your dependent services up? If one is down, can you recover? For example, I use some 3rd party back-end services that might be down. If one is down I could use cached data, but my site is less than "Healthy," and I'd like to know. Is my disk full? Is my CPU hot? You get the idea.

You also need to distinguish between a "liveness" test and a "readiness" test. A liveness failure means the site is down, dead, and needs fixing. A readiness failure means it's there but perhaps isn't ready to serve traffic: waking up, or busy, for example.

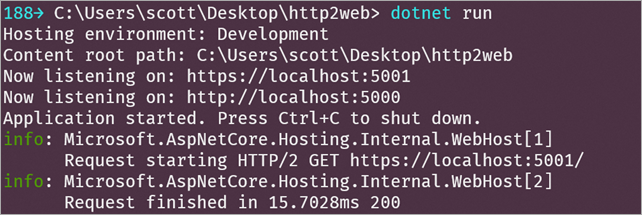

If you just want your app to report its liveness, just use the most basic ASP.NET Core 2.2 health check in your Startup.cs. It'll take you minutes to set up.

    // Startup.cs
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddHealthChecks(); // Registers health check services
    }

    public void Configure(IApplicationBuilder app)
    {
        app.UseHealthChecks("/healthcheck");
    }

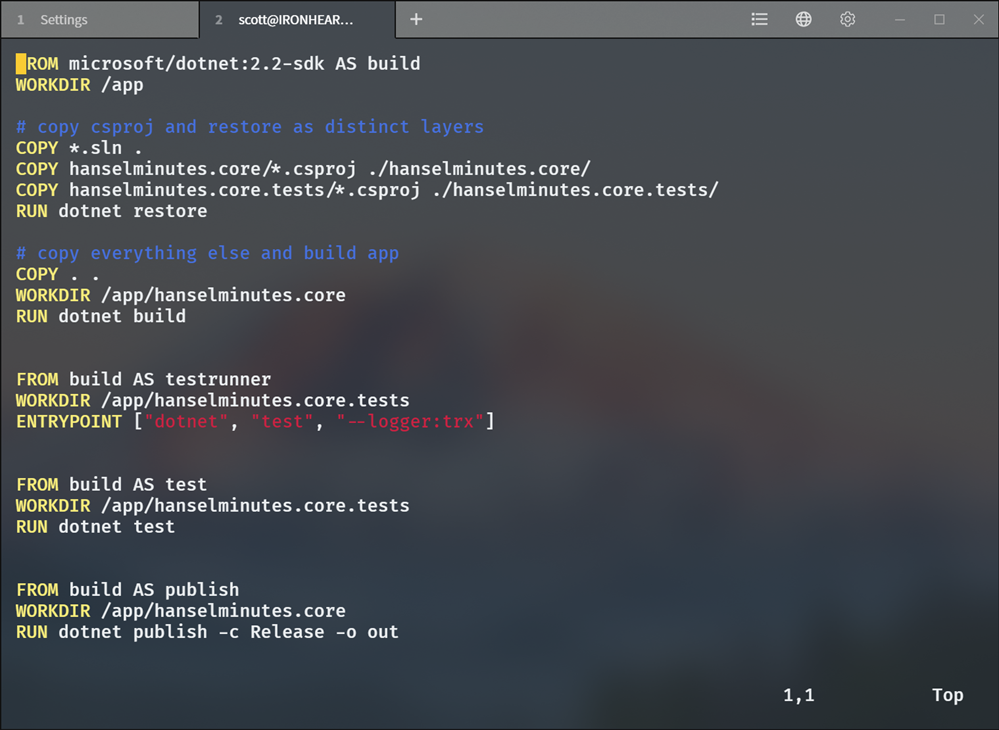

Now you can add a content check in Azure or Pingdom, or tell Docker or Kubernetes if you're alive or not. Docker has a HEALTHCHECK directive, for example:

    # Dockerfile
    ...
    HEALTHCHECK CMD curl --fail http://localhost:5000/healthcheck || exit 1

If you're using Kubernetes you could hook up the Healthcheck to a K8s "readinessProbe" to help it make decisions about your app at scale.
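To tie this back to liveness versus readiness, here is a minimal sketch of how the two could be split into separate endpoints using tags and the `Predicate` option. The endpoint paths, the "ready" tag, and the placeholder "dependencies" check are names made up for illustration; swap in whatever checks matter for your app.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Diagnostics.HealthChecks;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Diagnostics.HealthChecks;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddHealthChecks()
            // Hypothetical placeholder check; replace with a real dependency check.
            .AddCheck("dependencies",
                () => HealthCheckResult.Healthy("Dependencies reachable"),
                tags: new[] { "ready" });
    }

    public void Configure(IApplicationBuilder app)
    {
        // Liveness: the predicate excludes every check, so a 200 response
        // only means the process is up and answering requests.
        app.UseHealthChecks("/health/live", new HealthCheckOptions
        {
            Predicate = _ => false
        });

        // Readiness: run only the checks tagged "ready".
        app.UseHealthChecks("/health/ready", new HealthCheckOptions
        {
            Predicate = check => check.Tags.Contains("ready")
        });
    }
}
```

With something like this in place, a Kubernetes readinessProbe could point at /health/ready while a livenessProbe points at /health/live.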

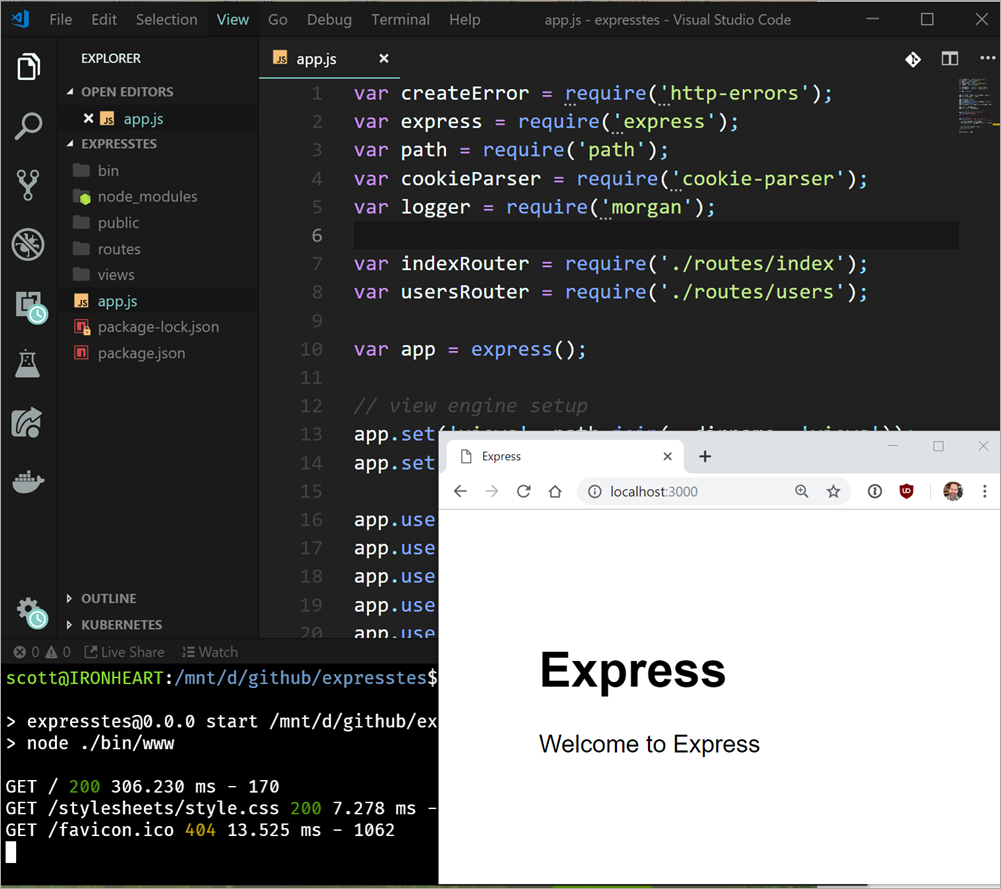

Now, since determining "health" is up to you, you can go as deep as you'd like! The BeatPulse open source project has integrated with the ASP.NET Core Health Check API and set up a repository at https://github.com/Xabaril/AspNetCore.Diagnostics.HealthChecks that you should absolutely check out!

Using these add-on methods you can check the health of everything: SQL Server, PostgreSQL, Redis, Elasticsearch, any URI, and on and on. Just add the package you need and then add the extension you want.
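If none of the packages covers what you care about, you can also write your own check by implementing `IHealthCheck`. Here is a rough sketch that answers the "is my disk full?" question from earlier. The class name and the 1 GB threshold are arbitrary choices for illustration, not a recommendation.

```csharp
using System.IO;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Diagnostics.HealthChecks;

// Hypothetical example: reports Degraded when free disk space runs low.
public class DiskSpaceHealthCheck : IHealthCheck
{
    // Arbitrary threshold chosen for this sketch (1 GB).
    private const long MinimumFreeBytes = 1024L * 1024 * 1024;

    public Task<HealthCheckResult> CheckHealthAsync(
        HealthCheckContext context,
        CancellationToken cancellationToken = default(CancellationToken))
    {
        // Look at the drive that hosts the app's working directory.
        var root = Path.GetPathRoot(Directory.GetCurrentDirectory());
        var drive = new DriveInfo(root);
        long free = drive.AvailableFreeSpace;

        var result = free >= MinimumFreeBytes
            ? HealthCheckResult.Healthy($"{free} bytes free on {drive.Name}")
            : HealthCheckResult.Degraded($"Only {free} bytes free on {drive.Name}");

        return Task.FromResult(result);
    }
}
```

You would register it next to the other checks with `services.AddHealthChecks().AddCheck<DiskSpaceHealthCheck>("disk");`.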

You don't usually want your health checks to be heavy but as I said, you could take the results of the "HealthReport" list and dump it out as JSON. If this is too much code going on (anonymous types, all on one line, etc) then just break it up. Hat tip to Dejan.

    app.UseHealthChecks("/hc",
        new HealthCheckOptions {
            ResponseWriter = async (context, report) =>
            {
                var result = JsonConvert.SerializeObject(
                    new {
                        status = report.Status.ToString(),
                        errors = report.Entries.Select(e => new { key = e.Key, value = Enum.GetName(typeof(HealthStatus), e.Value.Status) })
                    });
                context.Response.ContentType = MediaTypeNames.Application.Json;
                await context.Response.WriteAsync(result);
            }
        });

At this point my endpoint doesn't just say "Healthy," it looks like this nice JSON response.

    {
      "status": "Healthy",
      "errors": []
    }
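If the inline lambda is too dense for your taste, one way to break it up is to move the writer into a named static method. This is just a sketch that should produce the same payload shape as the lambda version; `HealthResponseWriter` and `WriteJson` are names I've made up for illustration.

```csharp
using System.Linq;
using System.Net.Mime;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Diagnostics.HealthChecks;
using Newtonsoft.Json;

public static class HealthResponseWriter
{
    // Matches the Func<HttpContext, HealthReport, Task> shape that
    // HealthCheckOptions.ResponseWriter expects.
    public static Task WriteJson(HttpContext context, HealthReport report)
    {
        // One { key, value } pair per registered health check.
        var entries = report.Entries.Select(e => new
        {
            key = e.Key,
            value = e.Value.Status.ToString()
        });

        var payload = new
        {
            status = report.Status.ToString(),
            errors = entries
        };

        context.Response.ContentType = MediaTypeNames.Application.Json;
        return context.Response.WriteAsync(JsonConvert.SerializeObject(payload));
    }
}
```

Hooking it up is then a one-liner: `app.UseHealthChecks("/hc", new HealthCheckOptions { ResponseWriter = HealthResponseWriter.WriteJson });`.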

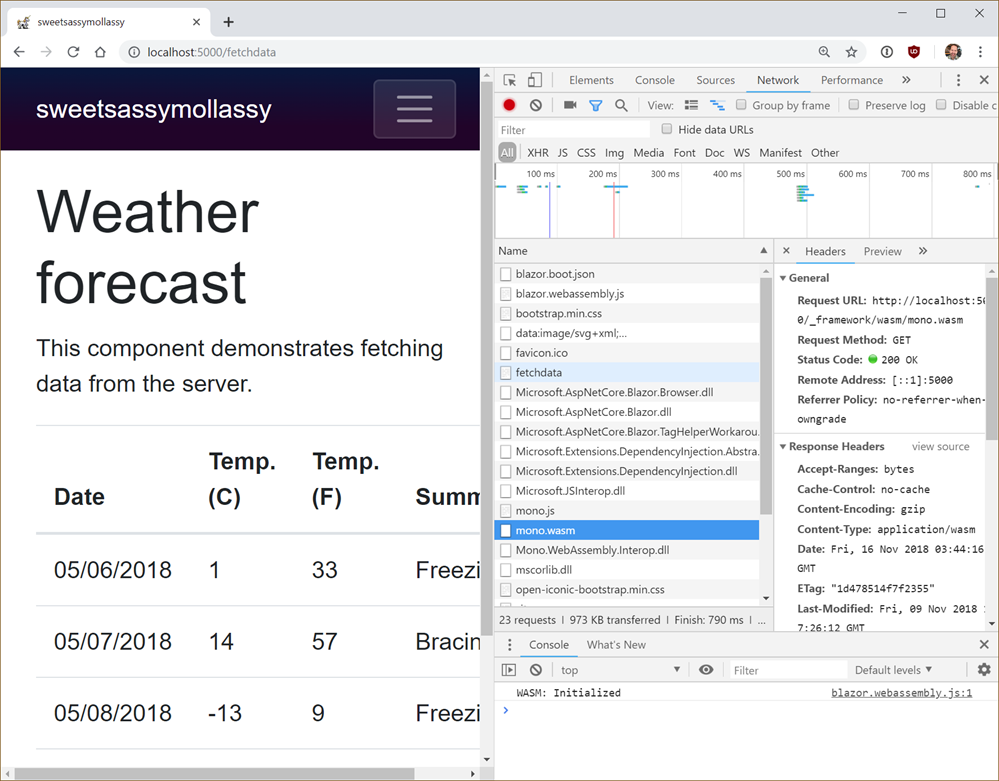

I could add a URL check for my back-end API. If it's down (or in this case, unauthorized), I'll get a nice explanation. I can decide if this means my site is unhealthy or degraded. I'm also pushing the results into Application Insights, which I can then query on and make charts against.

    services.AddHealthChecks()
        .AddApplicationInsightsPublisher()
        .AddUrlGroup(new Uri("https://api.simplecast.com/v1/podcasts.json"), "Simplecast API", HealthStatus.Degraded)
        .AddUrlGroup(new Uri("https://rss.simplecast.com/podcasts/4669/rss"), "Simplecast RSS", HealthStatus.Degraded);

Here is the response, cool, eh?

    {
      "status": "Degraded",
      "errors": [
        {
          "key": "Simplecast API",
          "value": "Degraded"
        },
        {
          "key": "Simplecast RSS",
          "value": "Healthy"
        }
      ]
    }

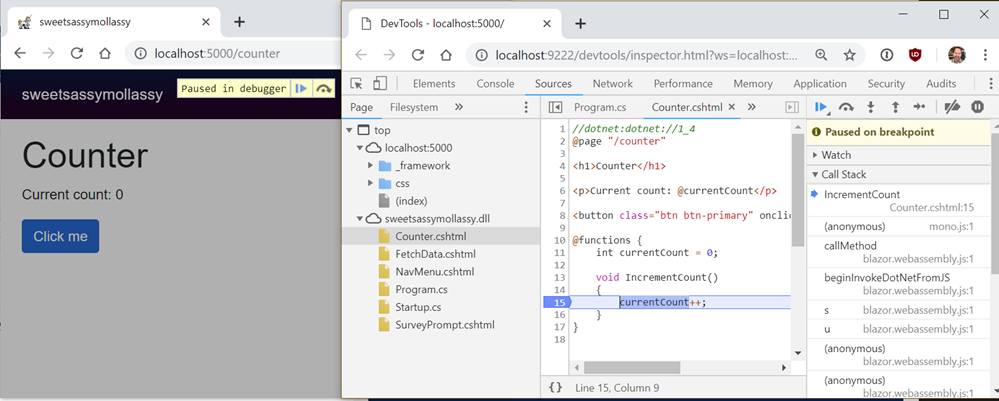

This JSON is custom, but perhaps I could use a built-in writer for a free, reasonable default and then hook up a free default UI?

    app.UseHealthChecks("/hc", new HealthCheckOptions()
    {
        Predicate = _ => true,
        ResponseWriter = UIResponseWriter.WriteHealthCheckUIResponse
    });

    app.UseHealthChecksUI(setup => { setup.ApiPath = "/hc"; setup.UiPath = "/healthcheckui"; });

Then I can hit /healthcheckui and it'll call the API endpoint and I get a nice little bootstrappy client-side front end for my health check. A mini dashboard if you will. I'll be using Application Insights and the API endpoint but it's nice to know this is also an option!

If I had a database, I could check one or more of those for health as well. The possibilities are endless and up to you.

    public void ConfigureServices(IServiceCollection services)
    {
        services.AddHealthChecks()
            .AddSqlServer(
                connectionString: Configuration["Data:ConnectionStrings:Sql"],
                healthQuery: "SELECT 1;",
                name: "sql",
                failureStatus: HealthStatus.Degraded,
                tags: new string[] { "db", "sql", "sqlserver" });
    }

It's super flexible. You can even set up ASP.NET Core Health Checks to have a webhook that sends a Slack or Teams message that lets the team know the health of the site.

Check it out. It'll take less than an hour or so to set up the basics of ASP.NET Core 2.2 Health Checks.

© 2018 Scott Hanselman. All rights reserved.

There's a great new bunch of guidance just published representing Best Practices for creating .NET Libraries. Best of all, it was shepherded by JSON.NET's James Newton-King. Who better to help explain the best way to build and publish a .NET library than the author of the world's most popular open source .NET library?

There's a great new bunch of guidance just published representing Best Practices for creating .NET Libraries. Best of all, it was shepherded by JSON.NET's James Newton-King. Who better to help explain the best way to build and publish a .NET library than the author of the world's most popular open source .NET library?

I've been exploring automated dependency tracking lately. I usually use my podcast's ASP.NET Core website that I host on Github as a guinea pig. I tried

I've been exploring automated dependency tracking lately. I usually use my podcast's ASP.NET Core website that I host on Github as a guinea pig. I tried

I've done IoT work on Raspberry Pis which is much easier lately with the emerging

I've done IoT work on Raspberry Pis which is much easier lately with the emerging

I love dashboards. I love Raspberry Pis (tiny $35 computers the size of a set of playing cards). And I'm

I love dashboards. I love Raspberry Pis (tiny $35 computers the size of a set of playing cards). And I'm

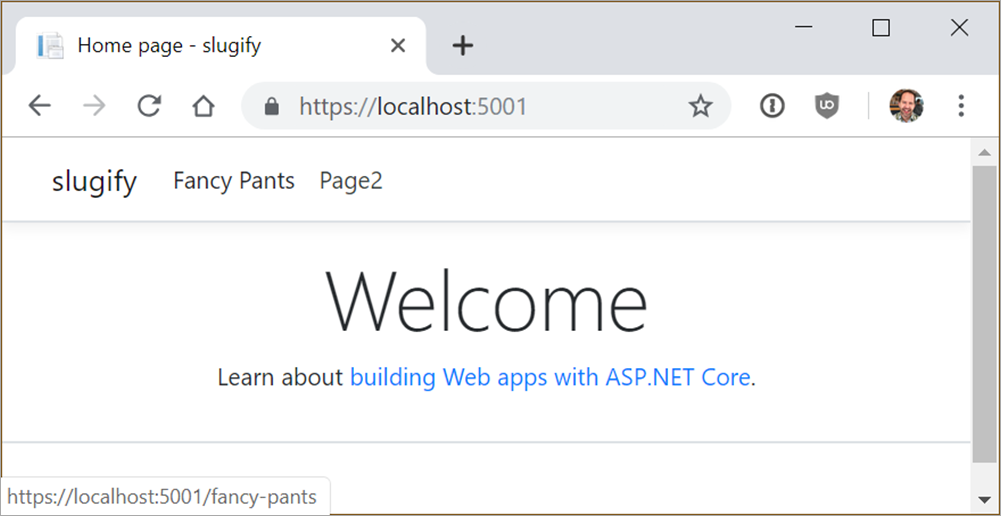

Naming things is hard. I've talked

Naming things is hard. I've talked