I was talking to my friend Rob Caron today. He produces Azure Friday with me - it's our weekly video podcast on Azure and the Cloud. We were talking about the magic for a successful episode, but then realized the ingredients that Rob came up with were generic enough that they were essential for anyone who is teaching or advocating for a technology.

Personally I don't believe in "evangelism" in a technical context and I dislike the term "Technology Evangelism." Not only does it evoke unnecessary zealotry but it also implies that your ~~religion~~ technology is not only what's best for someone, but that it's the only solution. Java people shouldn't try to convert PHP people. That's all nonsense, of course. I like the word "advocate" because you're (hopefully) advocating for the right solution regardless of technology.

Here are the 11 herbs and spices that are needed for a great technical talk, a good episode of a podcast or show, or a decent career talking and teaching about tech.

- Empathy for the guest – When talking to another person, never let someone flounder and fail – compensate when necessary so they are successful.

- Empathy for the audience – Stay conscious that you're delivering a talk/episode/post that people want to watch/read.

- Improvisation – Learn how to think on your feet and keep the conversation going (“Yes, and…”). Consider ComedySportz or other mind exercises.

- Listening – Don't just wait for your turn to speak, just to say something, and never interrupt to say it. Be present and conscious and respond to what you’re hearing.

- Speaking experience – Do the work. Hundreds of talks. Hundreds of interviews. Hundreds of shows. This ain’t your first rodeo. Being good means hard work and putting in the hours, over years. Whether it's 10 people in a lunch presentation or 2000 people in a keynote, you know what to articulate.

- Technical experience – You have to know the technology. Strive to have context and personal experiences to reference. If you've never built/shipped/deployed something real (multiple times) you're just talking.

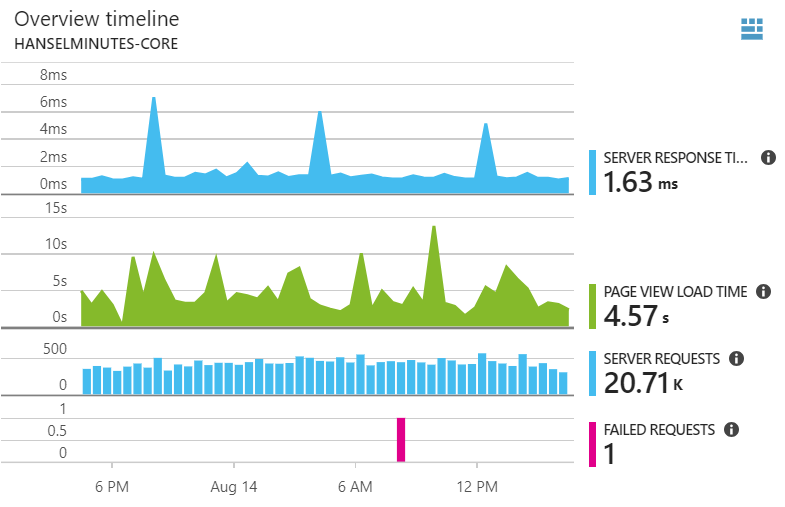

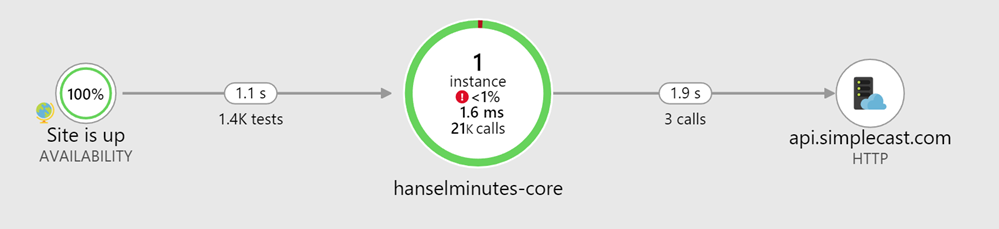

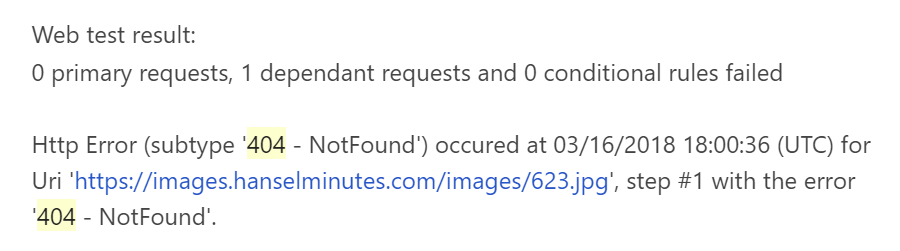

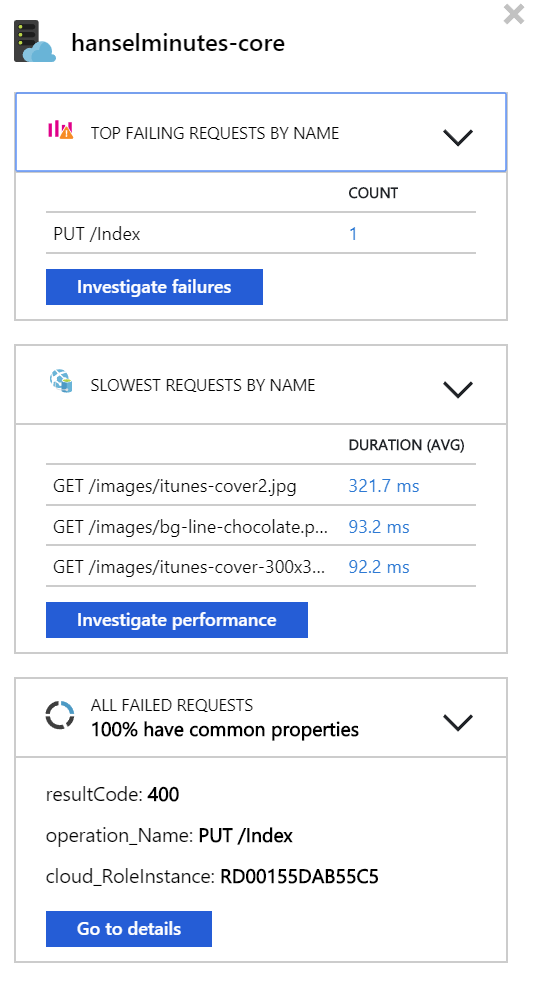

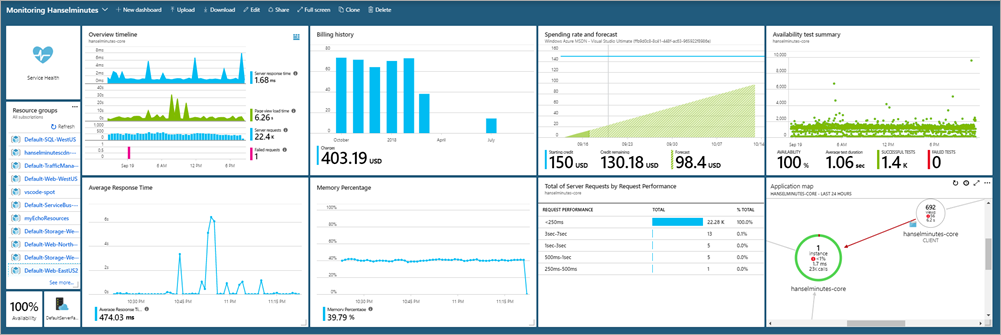

- Be a customer – You use the product, every day, and more than just to demo stuff. Run real sites, ship real apps, multiple times. Maintain sites, have sites go down and wake up to fix them. Carry the proverbial pager.

- Physical mannerisms – Avoid having odd personal tics and/or be conscious of your performance on video. I know what my tics are and I'm always trying to correct them. It's not self-critical, it's self-aware.

- Personal brand – I'm not a fan of "personal branding" but here's how I think of it. Show up. (So important.) You’re a known quantity in the community. You're reliable and kind. This lends credibility to your projects. Lend your voice and amplify others. Be yourself consistently and advocate for others, always.

- Confidence – Don't be timid in what you have to say BUT be perfectly fine with saying something that the guest later corrects. You're NOT the smartest person in the room. It's OK just to be a person in the room.

- Production awareness – Know how to ensure everything is set to produce a good presentation/blog/talk/video/sample (font size, mic, physical blocking, etc.). Always do tech checks. Always.

These are just a few tips but they've always served me well. We've done 450 episodes of Azure Friday and I've done nearly 650 episodes of the Hanselminutes Tech Podcast. Please Subscribe!

Related Links

- VIDEO: How to get started with technical public speaking!

- 11 Top Tips for a Successful Technical Presentation

- Technical Presentations: Be Prepared for Absolute Chaos

- Tips for Preparing for a Technical Presentation

* pic from stevebustin used under CC.

© 2018 Scott Hanselman. All rights reserved.

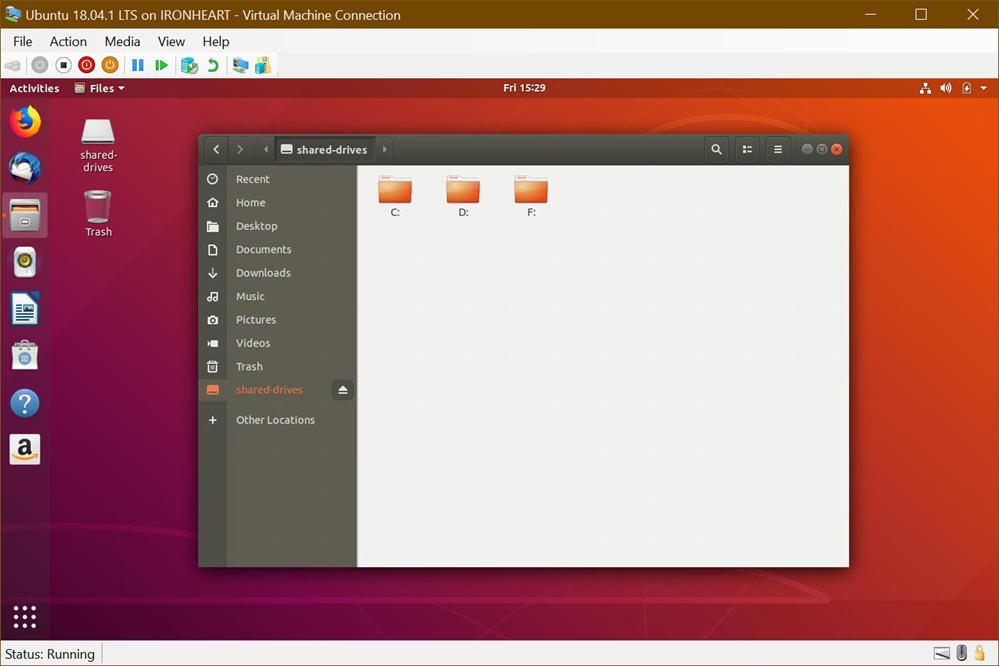

It's been 7 years since the last time I built "

It's been 7 years since the last time I built "

_619e195c-ef65-4d50-907c-8db472f4ecb2.png)

If you've looked at csproj (C# (csharp) projects) in the past in a text editor you probably looked away quickly. They are effectively MSBuild files that orchestrate the build process. Phrased differently, a csproj file is an instance of an MSBuild file.

If you've looked at csproj (C# (csharp) projects) in the past in a text editor you probably looked away quickly. They are effectively MSBuild files that orchestrate the build process. Phrased differently, a csproj file is an instance of an MSBuild file.

I was looking at a Well Known Open Source Project on GitHub today. It had like 978 Pull Requests. A "PR" means "hey here's some code I did for your project, you can PULL it from here and merge it into your code!"

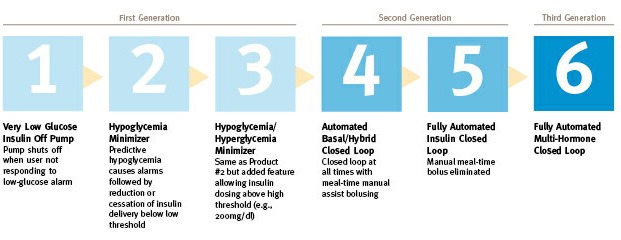

I was looking at a Well Known Open Source Project on GitHub today. It had like 978 Pull Requests. A "PR" means "hey here's some code I did for your project, you can PULL it from here and merge it into your code!" For some of the newer insulins like the ones I use, I pay as much as $296 a bottle. I have a Health Savings Plan so this is often out of pocket until I hit the limit for the year.

For some of the newer insulins like the ones I use, I pay as much as $296 a bottle. I have a Health Savings Plan so this is often out of pocket until I hit the limit for the year.

_thumb_1.png)

_thumb_1.png)

_thumb_1.png)

_thumb_1.png)

_thumb_1.png)

_thumb_1.png)

_thumb_1.png)

_thumb_1.png)

_thumb_1.png)

_thumb_1.png)

_thumb_2.png)

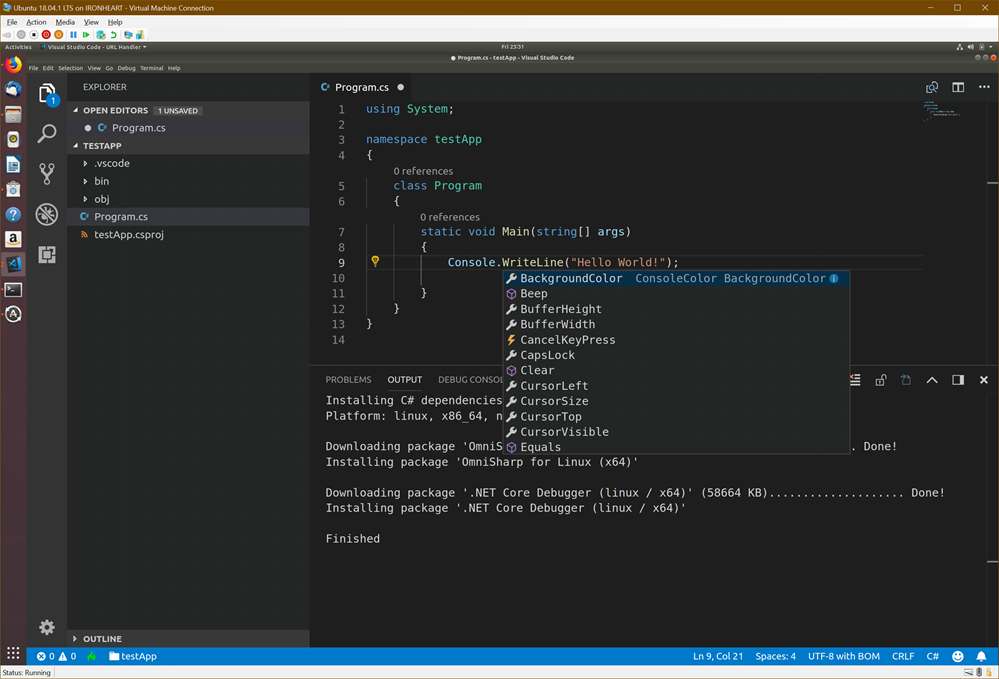

.NET Core is lovely. It's usage is skyrocketing, it's open source, and .NET Core 2.1 has some amazing performance improvements. Just

.NET Core is lovely. It's usage is skyrocketing, it's open source, and .NET Core 2.1 has some amazing performance improvements. Just

My that's a lot of acronyms. REPL means "Read Evaluate Print Loop." You know how you can run "python" and then just type 2+2 and get answer? That's a type of REPL.

My that's a lot of acronyms. REPL means "Read Evaluate Print Loop." You know how you can run "python" and then just type 2+2 and get answer? That's a type of REPL.

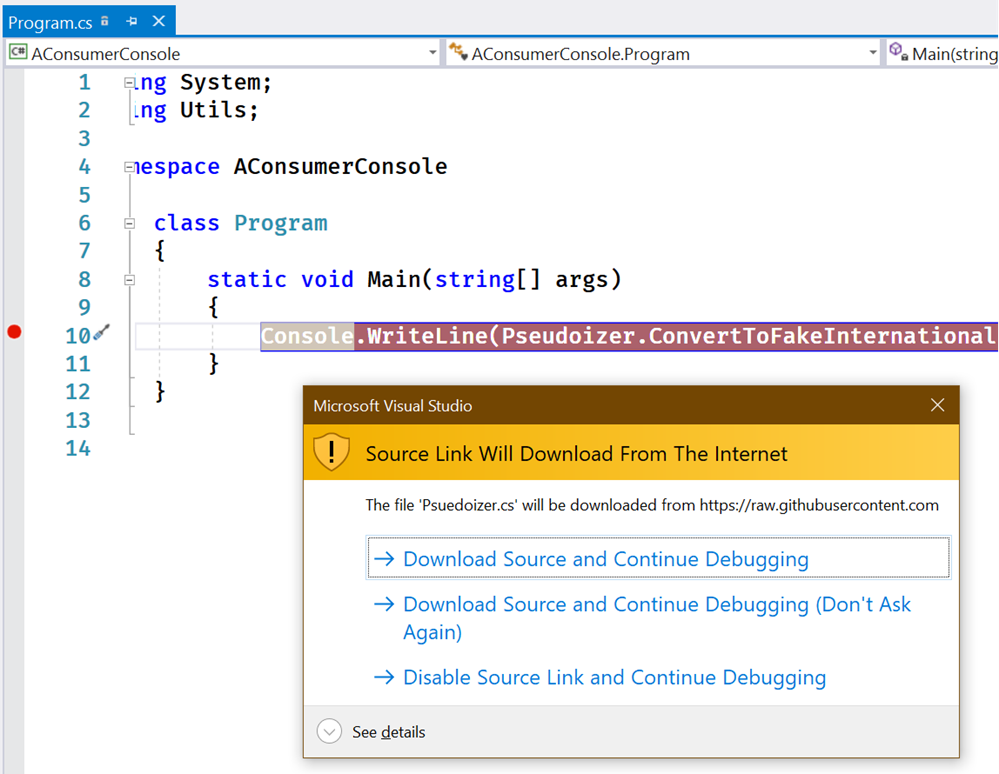

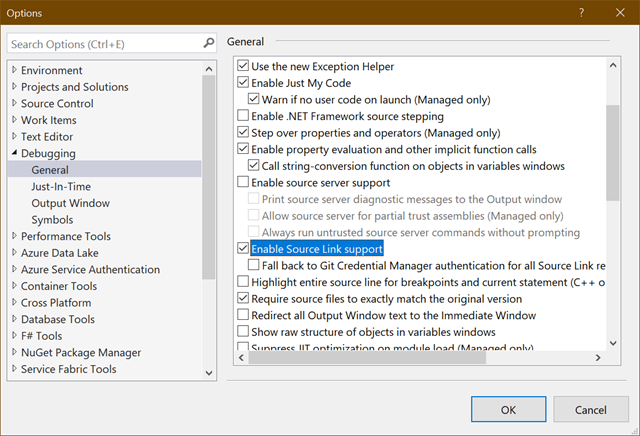

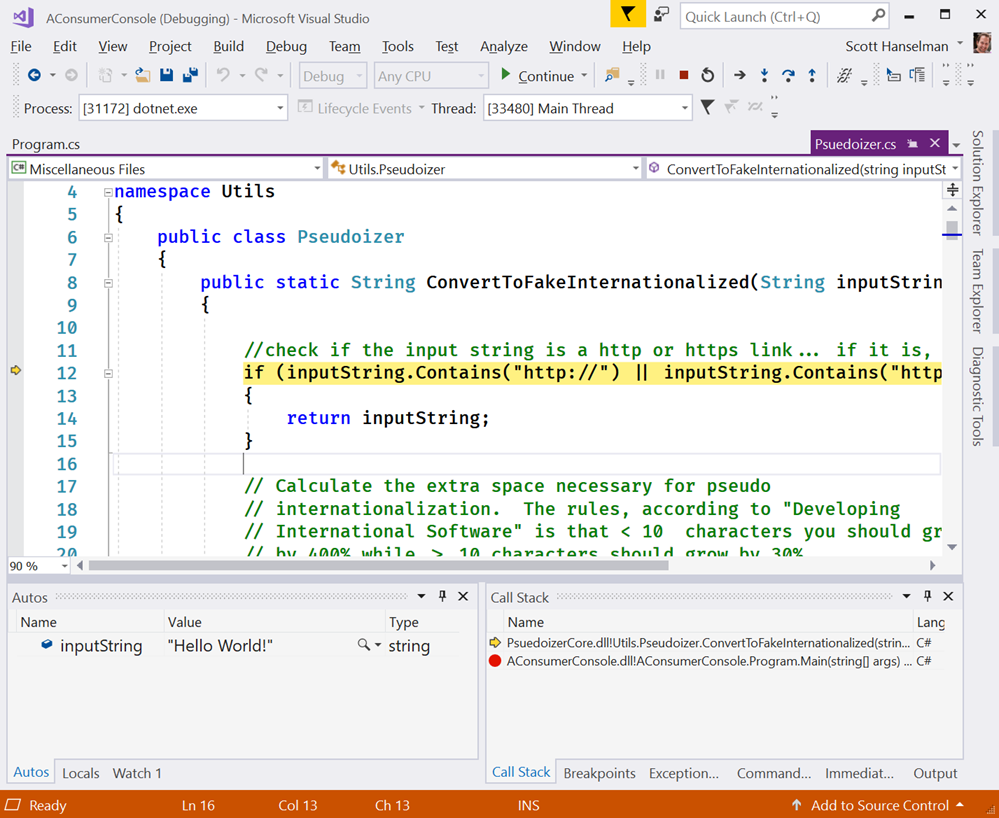

You likely know that open source .NET Core is cross platform and it's super easy to do "Hello World" and start writing some code.

You likely know that open source .NET Core is cross platform and it's super easy to do "Hello World" and start writing some code.