I got a tweet from Stevö John who said he found my existing light theme for my blog to be jarring as he lives in Dark Mode. I had never really thought about it before, but once he said it, it was obvious. Not only should I support dark mode, but I should detect the user's preference and switch seamlessly. I should also support changing modes if the browser or OS changes as well. Stevö was kind enough to send some sample CSS and a few links so I started to explore the topic.

There are a few things to consider when using prefers-color-scheme and detecting dark mode:

- Use the existing theme as much as possible.
  - I don't want to have a style.css and a style-dark.css if I can avoid it. Otherwise it'd be a maintenance nightmare.
- Make it work on all my sites.
  - I have three logical sites that look like two to you, Dear Reader. I have hanselman.com, hanselman.com/blog, and hanselminutes.com. They do share some CSS rules but they are written in different sub-flavors of ASP.NET.
- Consider 3rd party widgets.
  - I use a syntax highlighter (very very old) for my blog, and I use a podcast HTML5 player from Simplecast for my podcast. I'd hate to dark mode it all and then have a big old LIGHT MODE podcast player scaring people away. As such, I need the context to flow all the way through.
- Consider the initial state of the page as well as the state changing.
  - Sure, I could just have the page look good when you load it, but if you change modes (dark to light and back) in the middle of viewing my page, it should also change, right? And that has to hold for all the requirements above.

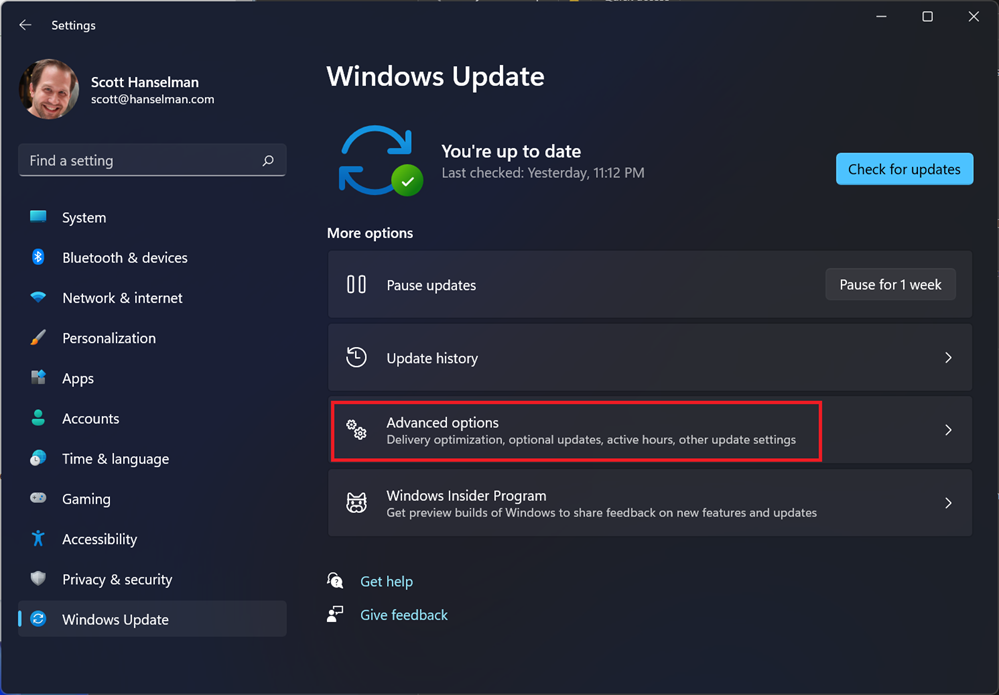

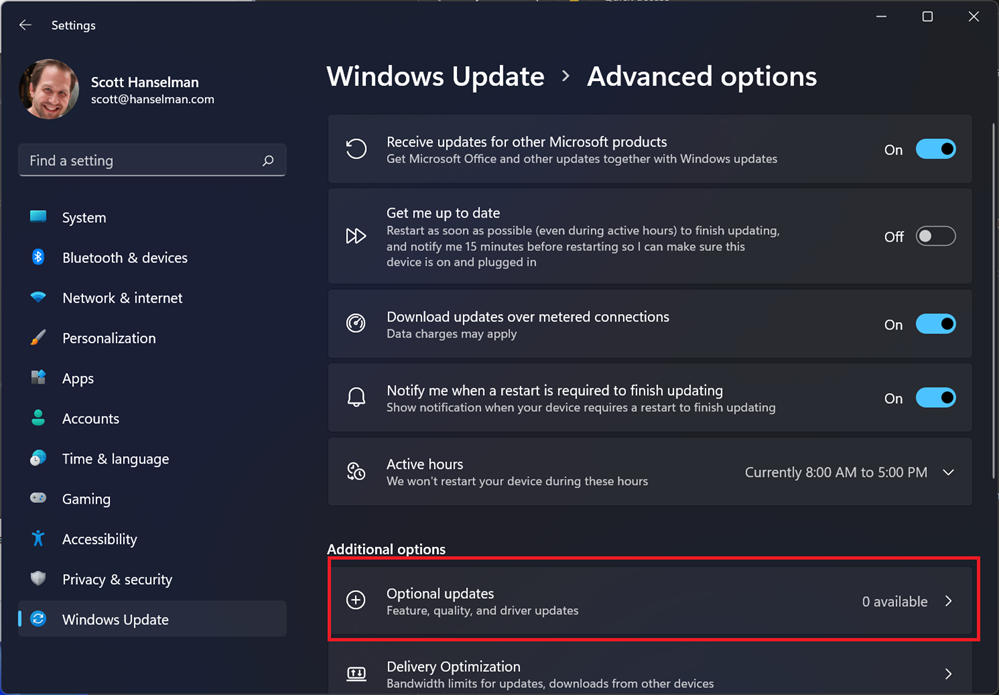

You can set your Chrome/Edge browser to use System Settings, Light, or Dark. Search for Theme in Settings.

All this, and I can only do it on my lunch hour because this blog isn't my actual day job. Let's go!

The prefers-color-scheme CSS Media Query

I love CSS @media queries and have used them for many years to support mobile and tablet devices. Today they are a staple of responsive design. Turns out you can just use a @media query to see if the user prefers dark mode.

@media (prefers-color-scheme: dark) {

Sweet. Anything inside here (the C in CSS stands for Cascading, remember) will override what comes before. Here's a few starter rules I changed. I was just changing stuff in the F12 tools inspector, and then collecting them back into my main CSS page. You can also use variables if you are an organized CSS person with a design system.

These are just a few, but you get the idea. Note the .line-tan example, where I say "just put it back to its initial value." That's often a lot easier than coming up with "the opposite" value, which in this case would have meant generating some PNGs.

    @media (prefers-color-scheme: dark) {
        body {
            color: #b0b0b0;
            background-color: #101010;
        }

        .containerOuter {
            background-color: #000;
            color: #b0b0b0;
        }

        .blogBodyContainer {
            background-color: #101010;
        }

        .line-tan {
            background: initial;
        }

        #mainContent {
            background-color: #000;
        }

        /* ...snip... */
    }

Sweet. This change to my main CSS works for the main site at http://hanselman.com. Let's do the blog now, which includes the 3rd party syntax highlighter. I used the same basic rules from my main site but also had to (sorry, CSS folks) be aggressive and overly !important with this very old syntax highlighter, like this:

    @media (prefers-color-scheme: dark) {
        .syntaxhighlighter {
            background-color: #000 !important;
        }

        .syntaxhighlighter .line.alt1 {
            background-color: #000 !important;
        }

        .syntaxhighlighter .line.alt2 {
            background-color: #000 !important;
        }

        .syntaxhighlighter .line {
            background-color: #000 !important;
        }

        /* ...snip... */
    }

Your mileage may vary; it all depends on the tools. I wasn't able to get this working without the !important, which I'm told is frowned upon. My apologies.

Detecting Dark Mode preferences with JavaScript

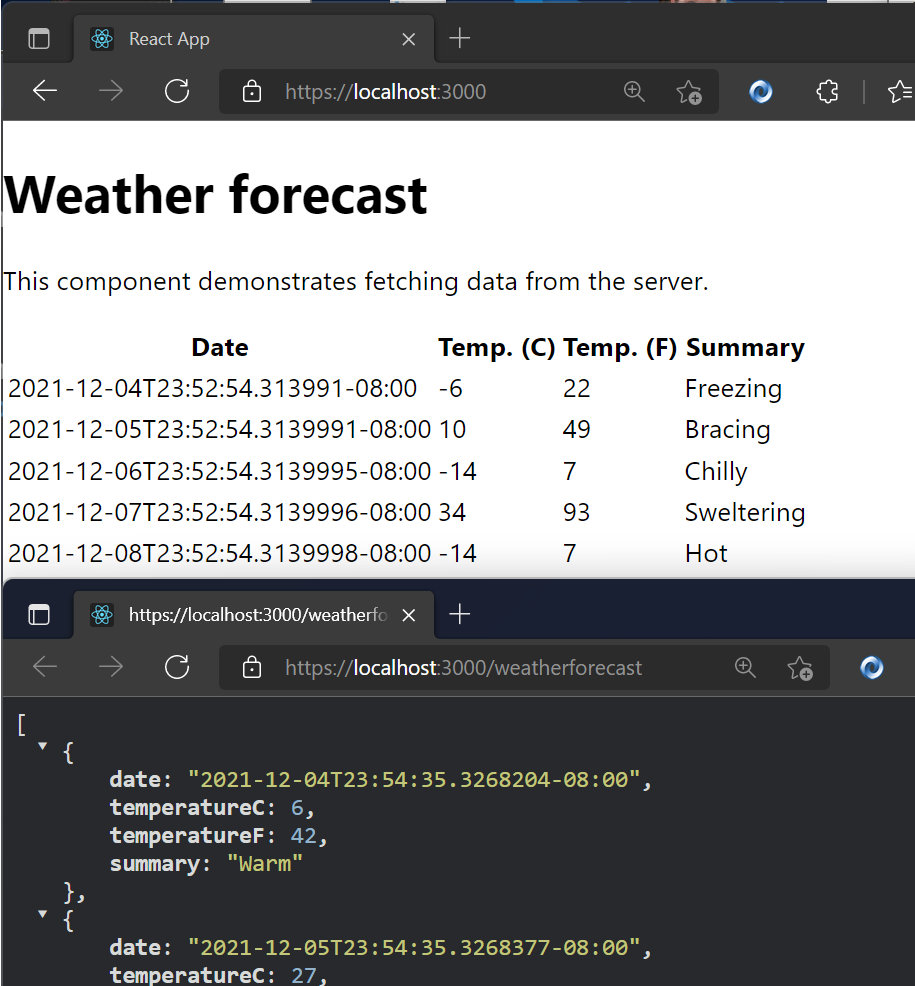

The third party control I use for my podcast is, like a lot of controls, an iFrame. As such, it takes its settings as URL querystring parameters.

I generate the iFrame like this:

    <iframe id='simpleCastPlayeriFrame'
        title='Hanselminutes Podcast Player'
        frameborder='0' height='200px' scrolling='no'
        seamless src='https://player.simplecast.com/{sharingId}'
        width='100%'></iframe>

If I add "dark=true" to the querystring, I'll get a different player skin. This is just one example, but it's common that 3rd party integrations will want a querystring, a variable, or custom CSS. You'll want to work with your vendors to make sure they not only care about dark mode (thanks Simplecast!) but also have a way to easily enable it like this.

But this introduces some interesting issues. I need to detect the preference with JavaScript and make sure the right player gets loaded.

I'd also like to notice if the theme changes (light to dark or back) and dynamically change both my CSS (the browser handles that part automatically) and this player (that's gotta be done manually, because dark mode was invoked via a URL querystring parameter).

Here's my code. Again, not a JavaScript expert but this felt natural to me. If it's not super idiomatic or it just sucks, email me and I'll do an update. I do check for window.matchMedia to at least not freak out if an older browser shows up.

    if (window.matchMedia) {
        var match = window.matchMedia('(prefers-color-scheme: dark)');
        toggleDarkMode(match.matches);

        match.addEventListener('change', e => {
            toggleDarkMode(match.matches);
        });

        function toggleDarkMode(state) {
            let simpleCastPlayer = new URL(document.querySelector("#simpleCastPlayeriFrame").src);
            simpleCastPlayer.searchParams.set("dark", state);
            document.querySelector("#simpleCastPlayeriFrame").src = simpleCastPlayer.href;
        }
    }

toggleDarkMode is a function so I can use it for both the initial state and the 'change' event. It uses the URL object because parsing strings is so 2000-and-late. I call searchParams.set rather than .append because set replaces any existing value, so the dark parameter never gets duplicated no matter how many times the mode flips.
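To make the set versus append difference concrete, here's a tiny sketch using only the standard URL object. The "example-id" in the URL is a made-up placeholder rather than a real sharing ID, and nothing here touches the page.

```javascript
// "example-id" is a placeholder invented for this example, not a real sharing ID.
const withSet = new URL("https://player.simplecast.com/example-id");

// set() replaces any existing "dark" value, so two toggles leave one parameter.
withSet.searchParams.set("dark", true);
withSet.searchParams.set("dark", false);
const afterSet = withSet.search; // "?dark=false"

// append() always adds another entry, so the same two calls pile up.
const withAppend = new URL("https://player.simplecast.com/example-id");
withAppend.searchParams.append("dark", true);
withAppend.searchParams.append("dark", false);
const afterAppend = withAppend.search; // "?dark=true&dark=false"

console.log(afterSet, afterAppend);
```

With append, every mode flip would make the player URL a little longer and leave it up to the player to decide which of the repeated values wins.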

As I write this I suppose I could have stored the result of document.querySelector() like I did with matchMedia, but I just saw it now. Darn. Still, it works! So I #shipit.
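For the curious, here's roughly what that cleanup could look like. Treat it as a sketch rather than the code running on the site: withDarkParam and watchColorScheme are names invented for this example, and "example-id" style URLs are assumed to behave like the real player URL. It looks the iFrame up once, reads the new state from the event, and falls back to the deprecated addListener, since older versions of Safari don't support addEventListener on the object matchMedia returns.

```javascript
// Hypothetical helper: returns the player URL with the dark flag set.
// It's a pure function, so it can be tried outside a browser.
function withDarkParam(src, isDark) {
  const url = new URL(src);
  url.searchParams.set("dark", String(isDark));
  return url.href;
}

// Hypothetical helper: calls onChange once with the current state,
// then again each time the media query result changes.
// mql is a MediaQueryList, or anything with the same shape.
function watchColorScheme(mql, onChange) {
  onChange(mql.matches);
  const handler = (e) => onChange(e.matches);
  if (typeof mql.addEventListener === "function") {
    mql.addEventListener("change", handler);
  } else if (typeof mql.addListener === "function") {
    mql.addListener(handler); // deprecated, but all older Safari offers
  }
}

// Browser wiring; skipped where window or matchMedia is missing.
if (typeof window !== "undefined" && window.matchMedia) {
  const frame = document.querySelector("#simpleCastPlayeriFrame"); // looked up once
  if (frame) {
    const query = window.matchMedia("(prefers-color-scheme: dark)");
    watchColorScheme(query, (isDark) => {
      frame.src = withDarkParam(frame.src, isDark);
    });
  }
}
```

Splitting the URL logic from the event wiring is a design choice, not a requirement. It just means the part that builds the querystring can be checked without a page or an iFrame around.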

I am sure I missed a page or two or an element or three, so if you find a white page or a mistake, file it at https://github.com/shanselman/hanselman.com-bugs/issues and I'll take a look when I can.

All in all, a fun lunch hour. Thanks Stevö for the nudge!

Now YOU, Dear Reader can go update YOUR sites for both Light Mode and Dark Mode.

© 2021 Scott Hanselman. All rights reserved.

I'm very much

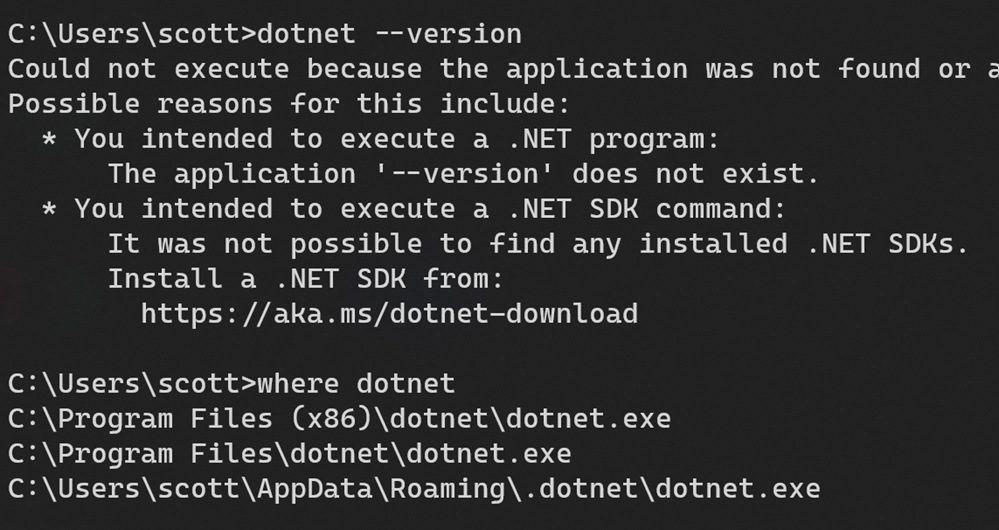

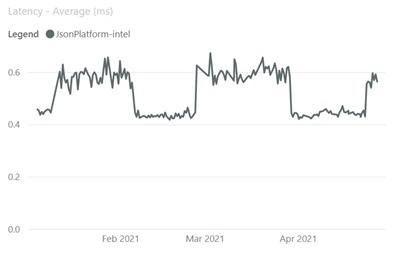

I'm very much  David and friends has a great repository filled with examples of "broken patterns" in ASP.NET Core applications. It's a fantastic learning resource with both markdown and code that covers a number of common areas when writing scalable services in ASP.NET Core. Some of the guidance is general purpose but is explained through the lens of writing web services.

David and friends has a great repository filled with examples of "broken patterns" in ASP.NET Core applications. It's a fantastic learning resource with both markdown and code that covers a number of common areas when writing scalable services in ASP.NET Core. Some of the guidance is general purpose but is explained through the lens of writing web services.