They're still working on the "dotnet new" templates, but you can also get cool templates from "yo aspnet" using Yeoman. The generator-aspnet package for Yeoman includes an empty web app, a console app, a few web app flavors, test projects, and a very simple Web API application that returns JSON and generally tries to be RESTful.

The Startup.cs is pretty typical and basic. The Startup constructor sets up the Configuration with an appsettings.json file, and the Configure method adds a basic Console logger. Then by calling "UseMvc()" we get to use all of ASP.NET Core MVC, which includes both centralized routing and attribute routing. ASP.NET Core's controllers are unified now, so there aren't separate "Controller" and "ApiController" base classes. It's just Controller. Controllers that return JSON and those that return Views with HTML are the same, so they get to share routes and lots of functionality.

    public class Startup
    {
        public Startup(IHostingEnvironment env)
        {
            var builder = new ConfigurationBuilder()
                .SetBasePath(env.ContentRootPath)
                .AddJsonFile("appsettings.json", optional: true, reloadOnChange: true)
                .AddJsonFile($"appsettings.{env.EnvironmentName}.json", optional: true)
                .AddEnvironmentVariables();
            Configuration = builder.Build();
        }

        public IConfigurationRoot Configuration { get; }

        // This method gets called by the runtime. Use this method to add services to the container.
        public void ConfigureServices(IServiceCollection services)
        {
            // Add framework services.
            services.AddMvc();
        }

        // This method gets called by the runtime. Use this method to configure the HTTP request pipeline.
        public void Configure(IApplicationBuilder app, IHostingEnvironment env, ILoggerFactory loggerFactory)
        {
            loggerFactory.AddConsole(Configuration.GetSection("Logging"));
            loggerFactory.AddDebug();
            app.UseMvc();
        }
    }

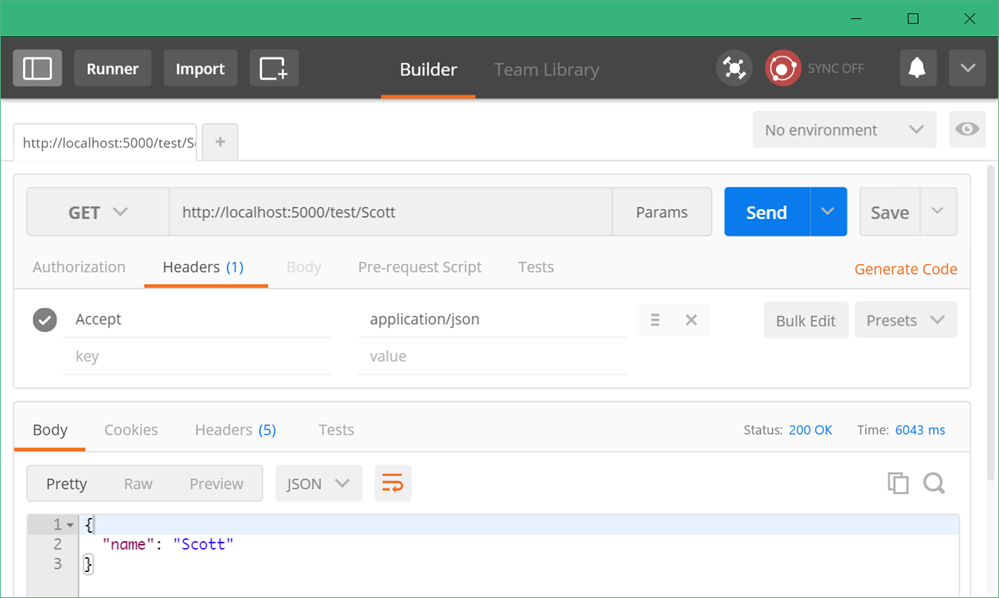
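
The Startup above calls UseMvc() with no arguments, so it relies entirely on attribute routing. If you wanted the centralized style instead, a minimal sketch might look like the following. The class name StartupWithConventionalRoute and the "default" route template are my own assumptions for illustration. They aren't part of the generated template.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;

// Hypothetical variant of the Startup class, trimmed down to the routing parts.
public class StartupWithConventionalRoute
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddMvc();
    }

    public void Configure(IApplicationBuilder app)
    {
        // Centralized (conventional) routing: one route table declared up front.
        // Controllers with [Route]/[HttpGet] attributes keep using attribute routing.
        app.UseMvc(routes =>
        {
            routes.MapRoute(
                name: "default",
                template: "{controller=Home}/{action=Index}/{id?}");
        });
    }
}
```

Both styles can live in the same app, which is handy if you have HTML pages and API endpoints side by side.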

Then you can make a basic controller and use Attribute Routing to do whatever makes you happy. Just putting [HttpGet] on a method makes that method the /api/Values default for a simple GET.

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using Microsoft.AspNetCore.Mvc;

    namespace tinywebapi.Controllers
    {
        [Route("api/[controller]")]
        public class ValuesController : Controller
        {
            // GET api/values
            [HttpGet]
            public IEnumerable<string> Get()
            {
                return new string[] { "value1", "value2" };
            }

            // GET api/values/5
            [HttpGet("{id}")]
            public string Get(int id)
            {
                return "value";
            }

            // POST api/values
            [HttpPost]
            public void Post([FromBody]string value)
            {
            }

            // PUT api/values/5
            [HttpPut("{id}")]
            public void Put(int id, [FromBody]string value)
            {
            }

            // DELETE api/values/5
            [HttpDelete("{id}")]
            public void Delete(int id)
            {
            }
        }
    }

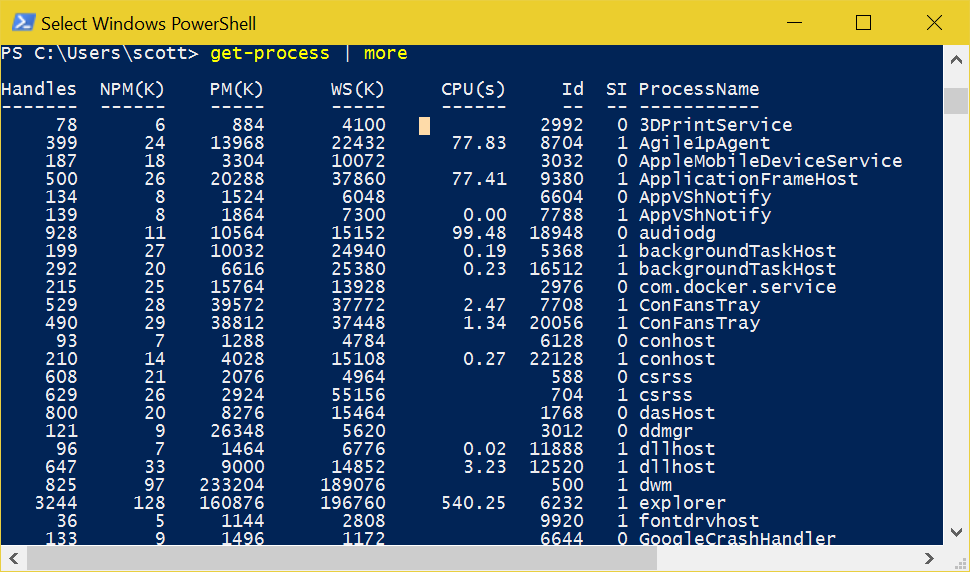

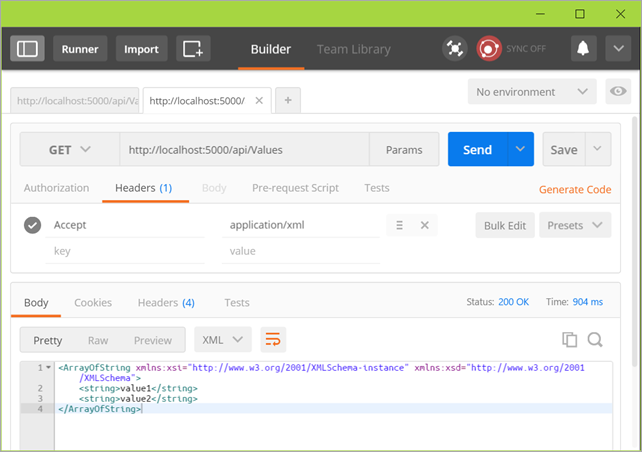
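
Since there's just one Controller base class now, a single controller can serve both HTML and JSON. Here's a rough sketch of what that could look like. StatusController, its routes, and the Views/Status/Index.cshtml view it expects are all hypothetical names I made up for this example.

```csharp
using Microsoft.AspNetCore.Mvc;

namespace tinywebapi.Controllers
{
    // Hypothetical controller, not part of the generated template.
    public class StatusController : Controller
    {
        // GET /status returns HTML.
        // Assumes a Views/Status/Index.cshtml view exists in the project.
        [HttpGet("/status")]
        public IActionResult Index()
        {
            return View();
        }

        // GET /api/status returns JSON from the very same controller.
        [HttpGet("/api/status")]
        public IActionResult Get()
        {
            return Json(new { status = "ok" });
        }
    }
}
```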

If we run this with "dotnet run" and call/curl/whatever to http://localhost:5000/api/Values we'd get a JSON array of two values by default. How would we (gasp!) add XML as a formatting/serialization option that would respond to a request with an Accept: application/xml header set?

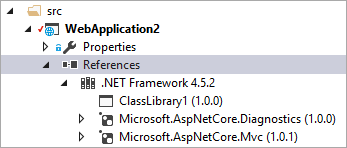

I'll add "Microsoft.AspNetCore.Mvc.Formatters.Xml" to project.json and then add one method to ConfigureServices():

    services.AddMvc()
        .AddXmlSerializerFormatters();

Now when I go into Postman (or curl, etc) and do a GET with Accept: application/xml as a header, I'll get the same object expressed as XML. If I ask for JSON, I'll get JSON.
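
If you'd rather do the same thing from code instead of Postman, here's a minimal sketch using HttpClient. It assumes the app is running locally on http://localhost:5000 as described above. The AcceptHeaderDemo class and its method names are my own inventions for this example.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

// Hypothetical helper for trying out content negotiation from a client.
public static class AcceptHeaderDemo
{
    // Builds a GET request that asks the server for a specific media type.
    public static HttpRequestMessage BuildRequest(string url, string mediaType)
    {
        var request = new HttpRequestMessage(HttpMethod.Get, url);
        request.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue(mediaType));
        return request;
    }

    // Sends the request and returns the raw response body as a string.
    public static async Task<string> FetchAsync(HttpClient client, string mediaType)
    {
        using (var request = BuildRequest("http://localhost:5000/api/Values", mediaType))
        using (var response = await client.SendAsync(request))
        {
            response.EnsureSuccessStatusCode();
            return await response.Content.ReadAsStringAsync();
        }
    }

    // Asks for the same resource twice, once as JSON and once as XML.
    public static async Task RunAsync()
    {
        using (var client = new HttpClient())
        {
            Console.WriteLine(await FetchAsync(client, "application/json"));
            Console.WriteLine(await FetchAsync(client, "application/xml"));
        }
    }
}
```

Call AcceptHeaderDemo.RunAsync() from a console app's Main while the Web API is running and you should see the same values come back in two different formats.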

If I like, I can create my own custom formatters and return whatever makes me happy. PDFs, vCards, even images.
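
To give a feel for what a custom formatter involves, here's a rough sketch of one that answers Accept: text/csv requests. The CsvOutputFormatter name and the text/csv choice are my own assumptions, and it does no quoting or escaping, so treat it as a starting point rather than a real CSV writer.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;
using System.Text;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc.Formatters;
using Microsoft.Net.Http.Headers;

// Hypothetical formatter: writes any IEnumerable<string> as one comma-separated line.
public class CsvOutputFormatter : TextOutputFormatter
{
    public CsvOutputFormatter()
    {
        SupportedMediaTypes.Add(MediaTypeHeaderValue.Parse("text/csv"));
        SupportedEncodings.Add(Encoding.UTF8);
    }

    // Only handle results that are sequences of strings, like ValuesController.Get().
    protected override bool CanWriteType(Type type)
    {
        if (type == null)
        {
            return false;
        }
        return typeof(IEnumerable<string>).GetTypeInfo().IsAssignableFrom(type.GetTypeInfo());
    }

    public override Task WriteResponseBodyAsync(OutputFormatterWriteContext context, Encoding selectedEncoding)
    {
        var values = context.Object as IEnumerable<string> ?? new string[0];
        return context.HttpContext.Response.WriteAsync(ToCsvLine(values), selectedEncoding);
    }

    // Naive join with no quoting or escaping of commas inside values.
    public static string ToCsvLine(IEnumerable<string> values)
    {
        return string.Join(",", values);
    }
}

// Registration sketch, inside ConfigureServices():
//   services.AddMvc(options => options.OutputFormatters.Add(new CsvOutputFormatter()));
```

With that registered, a GET to /api/Values with Accept: text/csv should be routed to this formatter, while JSON stays the default for everything else.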

Next post I'm going to explore the open source NancyFx framework and how to make a minimal Web API using Nancy under .NET Core.

© 2016 Scott Hanselman. All rights reserved.
