My buddy Greg and I are getting ready to launch our little side startup, and I was going through our product backlog. Our app consists of a global cloud service with SignalR, an iPhone app made with Xamarin tools, and a WPF app.

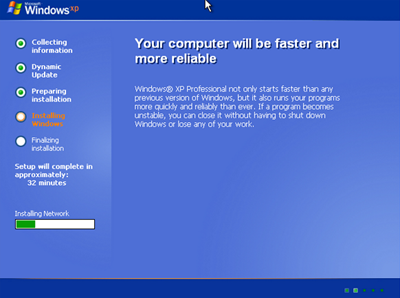

One of the items in our Trello backlog was "Support Windows XP. Gasp!"

I hadn't given this item much thought, but I figured it was worth a few hours' look. If it was easy, why not, right?

Our WPF desktop application was written for .NET 4.5, which isn't supported on Windows XP. I want my app to support as basic and mainstream a .NET 4 installation as possible.

Could I change my app to target .NET 4 directly? I use the new async and await features extensively.

Well, of course, I remembered Microsoft released the Async Targeting Pack (Microsoft.Bcl.Async) through NuGet to do just this. In fact, if I was targeting .NET 3.5 I could use Omer Mor's AsyncBridge for .NET 3.5, so it's good that I have choices.

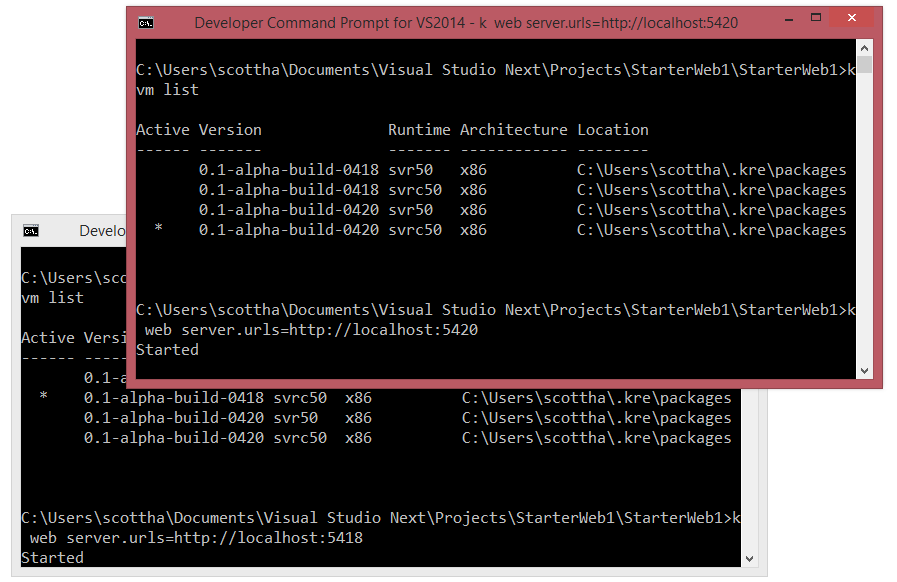

I changed my project to target .NET 4, rather than 4.5, installed these NuGets, and recompiled. No problem, right?

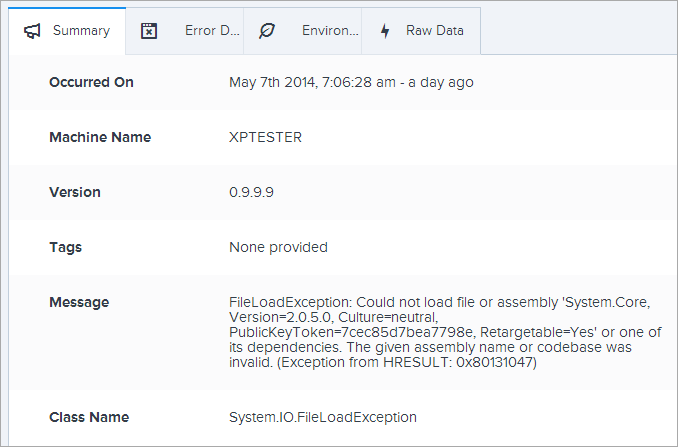
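For what it's worth, here's a minimal sketch of what async code looks like when you target .NET 4 with Microsoft.Bcl.Async. This isn't code from our app; `ComputeAsync` is a made-up name for illustration. The thing to notice is that helpers like `Task.Delay` and `Task.Run` don't exist on .NET 4, so the package exposes them on a `TaskEx` class instead.

```csharp
using System;
using System.Threading.Tasks;

public static class Program
{
    // Hypothetical example method, not from the real app.
    // TaskEx is supplied by the Microsoft.Bcl.Async NuGet package on .NET 4.
    public static async Task<int> ComputeAsync(int x)
    {
        // Stand-in for Task.Delay, which only exists on .NET 4.5 and later.
        await TaskEx.Delay(100);

        // Stand-in for Task.Run: do the work on a thread-pool thread.
        return await TaskEx.Run(() => x * 2);
    }

    public static void Main()
    {
        // C# 5 has no async Main, so a console app blocks on the result here.
        int result = ComputeAsync(21).Result;
        Console.WriteLine(result); // prints 42
    }
}
```

Code that already calls `Task.Run` or `Task.Delay` needs that small rename when retargeting. Plain `await` on a `Task` compiles as-is once the package is referenced.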

However, when I run my application on Windows XP it crashes immediately. Fortunately I have instrumented it with Raygun.io so all my crashes go to the cloud for analysis. It gives me this nice summary:

Here's the important part:

    FileLoadException: Could not load file or assembly
    'System.Core, Version=2.0.5.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e, Retargetable=Yes'
    or one of its dependencies. The given assembly name or codebase was invalid.
    (Exception from HRESULT: 0x80131047)

That's weird, I'm using .NET 4 which includes System.Core version 4.0. I can confirm what's in the GAC (Global Assembly Cache) with this command at the command line. Remember, your computer isn't a black box.

    C:\>gacutil /l | find /i "system.core"
    System.Core, Version=3.5.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089, processorArchitecture=MSIL
    System.Core, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089, processorArchitecture=MSIL

OK, so there isn't even a System.Core version 2.0.5 in the GAC. Turns out that System.Core 2.0.5 is the Portable Libraries version, meant to be used everywhere (Silverlight, etc.), so they made the version number compatible.
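If you want to double-check this from inside the process rather than with gacutil, a small sketch like the one below prints which assembly actually supplies LINQ at runtime. `DescribeLinqAssembly` is a hypothetical helper name, and I'm assuming a plain console app. On .NET Framework 4 the `Enumerable` type lives in System.Core, so you'd expect to see the 4.0.0.0 identity rather than 2.0.5.0.

```csharp
using System;
using System.Linq;

public static class Program
{
    // Hypothetical helper: returns the full identity (name, version,
    // culture, public key token) of the assembly defining Enumerable.
    public static string DescribeLinqAssembly()
    {
        return typeof(Enumerable).Assembly.FullName;
    }

    public static void Main()
    {
        Console.WriteLine(DescribeLinqAssembly());
        Console.WriteLine("CLR version: " + Environment.Version);
    }
}
```

This only tells you what the running process resolved. The 2.0.5.0 reference in the exception comes from the Portable Library's own metadata, which is why it never shows up in the GAC listing.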

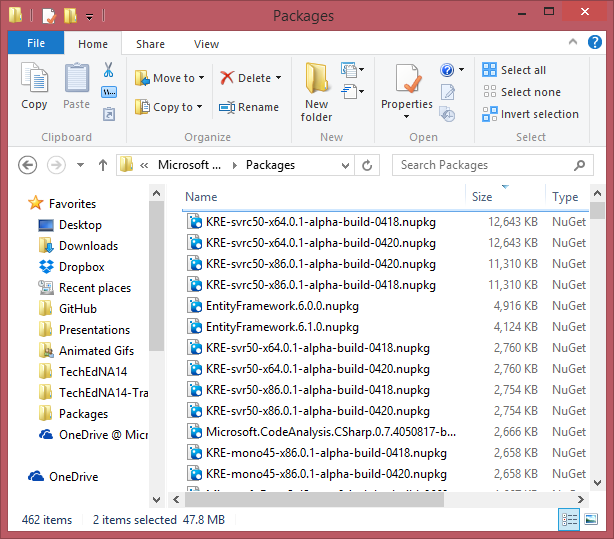

Because we're building our iPhone app with Xamarin tools and we anticipate supporting other platforms, we use a Portable Library to share code. But it seems that support for Portable Libraries was enabled on .NET 4 vanilla by the KB2468871 update.

I don't want to require any specific patch level or hotfixes. While this .NET 4 framework update was pushed to machines via Windows Update, for now I want to support the most basic install if I can. So if the issue is Portable Libraries (which I still want to use) then I'll want to bring those shared files in another way.

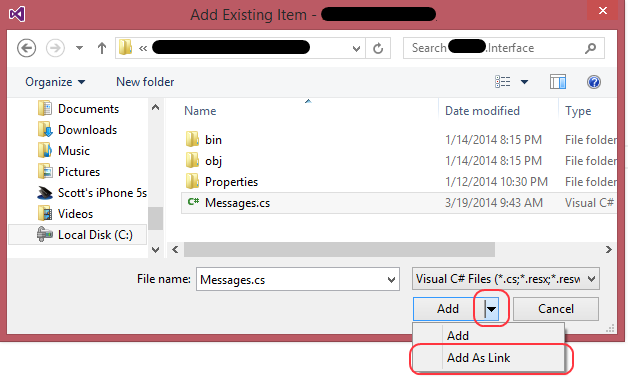

You can LINK source code in Visual Studio when you Add File by clicking the little dropdown and then Add as Link:

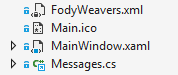

Now my Messages.cs file is a link. See the little shortcut overlay in blue?

I removed the project reference to the Portable Library for this WPF application and brought the code in this way. I'm still sharing code, just not as a binary for this one application.

Recompile and redeploy, and magically my .NET 4 WPF application with async/await and MahApps.Metro styling starts up and runs wonderfully on this 12-year-old OS with just .NET 4 installed.

For our application this means that my market just got opened up a little, and now I can sell my product to the millions of pirated and forever unpatched Windows XP machines in the world. Which is a good thing.

© 2014 Scott Hanselman. All rights reserved.

![pnggauntlet-screen-a0eadac6[1] pnggauntlet-screen-a0eadac6[1]](http://www.hanselman.com/blog/content/binary/Windows-Live-Writer/9c8948447812_144F5/pnggauntlet-screen-a0eadac6%5B1%5D_280de89c-4165-49a3-a273-4f042318c182.png)

What's interesting about this, to me, is that these "control/concepts" (my term) are coded at a high level but rendered as their native counterparts. So a "tab" in my code is expressed in its most specific and native counterpart on the mobile device, rather than as a generic tab control as in my Java example. Let's see an example.

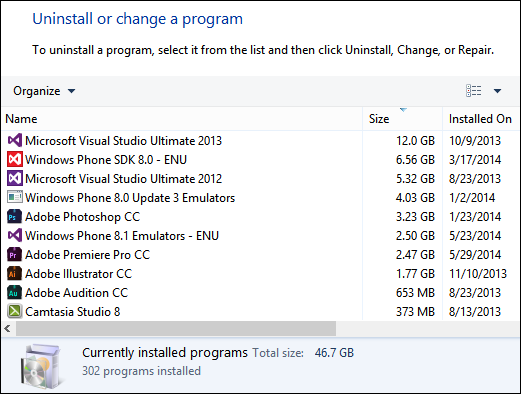

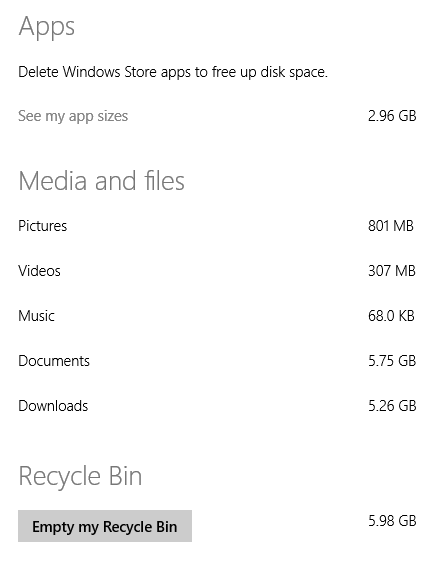

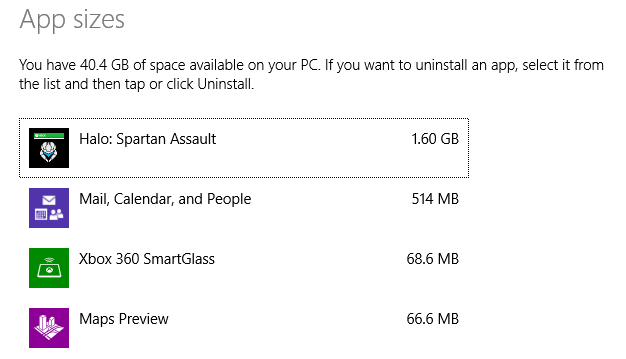

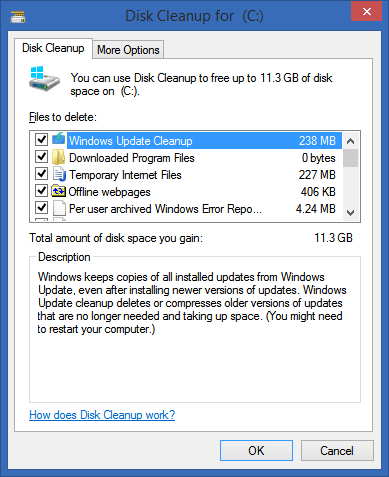

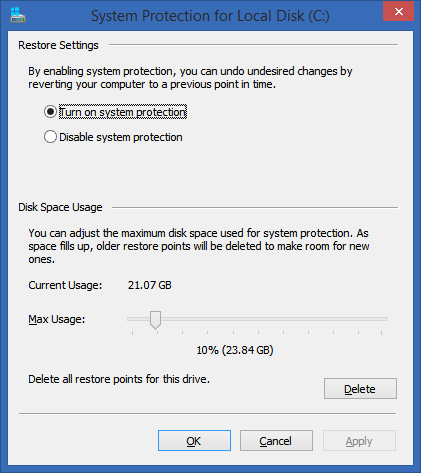

This is an "updated for Windows 8.1" version of my popular original article

Delete your Browser Cache - Whether you use Chrome, IE or Firefox, your browser is saving probably a gig or more of temporary files. Consider clearing it out manually (or use the CCleaner mentioned below) occasionally or move the cache from your browser's settings to another drive with more space.
