First, a disclaimer. Don't do this. I did this to test a theory and to prove a point. ASP.NET Core and the .NET Core that it runs on are open source and run pretty much anywhere. I wanted to see if I could run an ASP.NET Core site on GoDaddy's cheapest hosting ($3, although it scales to $8) that basically supports only PHP. It's not a full Linux VM. It's locked down and limited. You don't have root. You're missing most of the tools you'd expect to have.

BUT.

I wanted to see if I could get ASP.NET Core running on it anyway. Maybe if I do, they (and other inexpensive hosts) will talk to the .NET team and learn that ASP.NET Core is open source and could easily run on their existing infrastructure.

AGAIN: Don't do this. It's hacky. It's silly. But it's hella cool. IMHO. Also, big thanks to Tomas Weinfurt for his help!

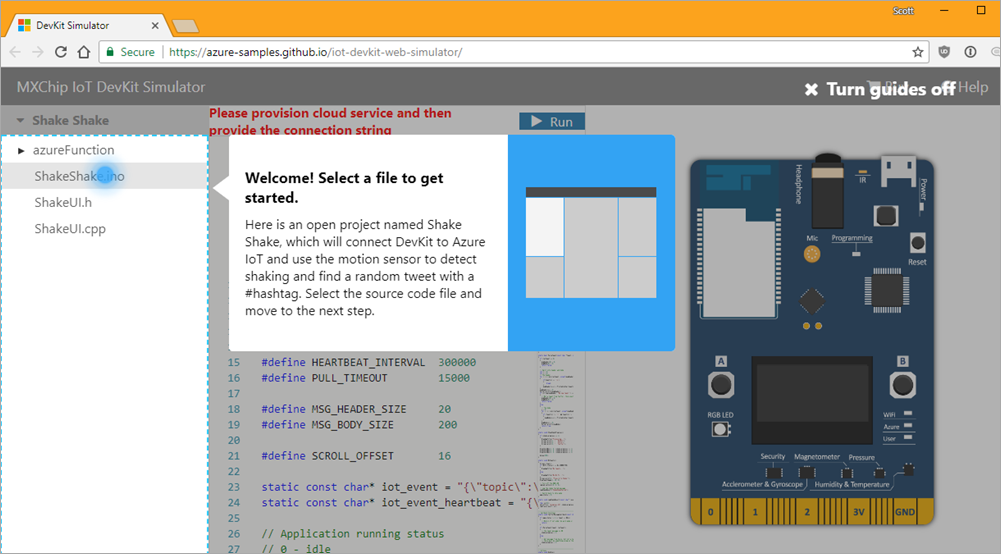

First, I went to GoDaddy and signed up for their cheap hosting. Again, not a VM, but their shared one. I also registered supercheapaspnetsite.com. They use a cPanel-based web management system that doesn't really let you do anything. You can turn on SSH, do some PHP stuff, and generally poke around, but it's not exactly low-level.

Then I ssh (shoosh!) in and see what I'm working with. I'm shooshing with the Ubuntu on Windows 10 feature, which every developer should turn on. It makes it really easy to work with Linux hosts from a Windows 10 machine.

```
secretname@theirvmname [/proc]$ cat version
Linux version 2.6.32-773.26.1.lve1.4.46.el6.x86_64 (mockbuild@build.cloudlinux.com) (gcc version 4.4.7 20120313 (Red Hat 4.4.7-18) (GCC) ) #1 SMP Tue Dec 5 18:55:41 EST 2017
secretname@theirvmname [/proc]$
```

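OK, the el6 in that kernel string marks an Enterprise Linux 6 build (this is CloudLinux, which is built on Red Hat), so binaries targeting CentOS 6/RHEL 6 should be compatible.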
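That reasoning can be written down as a tiny helper. This is a sketch, assuming the `elN` tag in the kernel release string is a good enough hint for picking the .NET Core runtime identifier (RID) to pass to `dotnet publish -r`; the function name `rid_for_kernel` is hypothetical, and falling back to the generic `linux-x64` RID is an assumption for anything that isn't el6 or el7.

```shell
#!/bin/sh
# Hypothetical helper: guess a .NET Core runtime identifier (RID) from a kernel
# version string such as the contents of /proc/version. Enterprise Linux
# kernels carry an "elN" tag, e.g. 2.6.32-773.26.1.lve1.4.46.el6.x86_64.
rid_for_kernel() {
  case "$1" in
    *.el6.x86_64*) echo "rhel.6-x64" ;;  # RHEL/CentOS/CloudLinux 6
    *.el7.x86_64*) echo "rhel.7-x64" ;;  # RHEL/CentOS/CloudLinux 7
    *)             echo "linux-x64"  ;;  # generic fallback (assumption)
  esac
}

# Usage on the host:
#   rid_for_kernel "$(cat /proc/version)"
```

On this GoDaddy box it would suggest `rhel.6-x64`, which is the RID used for the publish step below.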

I'm going to use .NET Core 2.1 (which is in preview now!), get the SDK at https://www.microsoft.com/net/download/all, and install it on my Windows machine, where I'll develop and build the app. I don't NEED to use Windows to do this, but it's the laptop I have and it's also nice to know I can build on Windows but target CentOS/RHEL6.

Next I'll make a new ASP.NET site with

```
dotnet new razor
```

and then I'll publish a self-contained version like this:

```
dotnet publish -r rhel.6-x64
```

And those files will end up in a folder like \supercheapaspnetsite\bin\Debug\netcoreapp2.1\rhel.6-x64\publish\

NOTE: You may need to add the NuGet feed for the dailies for this .NET Core preview in order to get the RHEL6 runtime downloaded during this local publish.

Then I used WinSCP (or whatever FTP/SCP client you like, rsync, etc.) to get the files over to the ~/www folder on my GoDaddy shared site. Then I ran

```
chmod +x ./supercheapaspnetsite
```

to make it executable. Now, from my ssh session at GoDaddy, let's try to run my app!

```
secretname@theirvmname [~/www]$ ./supercheapaspnetsite
Failed to load hb, error: libunwind.so.8: cannot open shared object file: No such file or directory
Failed to bind to CoreCLR at '/home/secretname/public_html/libcoreclr.so'
```

Of course it couldn't be that easy, right? .NET Core wants the unwind library (shared object) and it doesn't exist on this locked down system.

AND I don't have yum/apt/rpm or any way to install it, right?

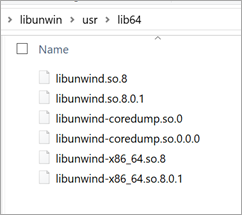

I could go looking for a tar.gz file somewhere like http://download.savannah.nongnu.org/releases/libunwind/, but I need to think about versions and make sure things line up. Given that I'm targeting CentOS 6, I should start at https://centos.pkgs.org/6/epel-x86_64/libunwind-1.1-3.el6.x86_64.rpm.html and download libunwind-1.1-3.el6.x86_64.rpm.

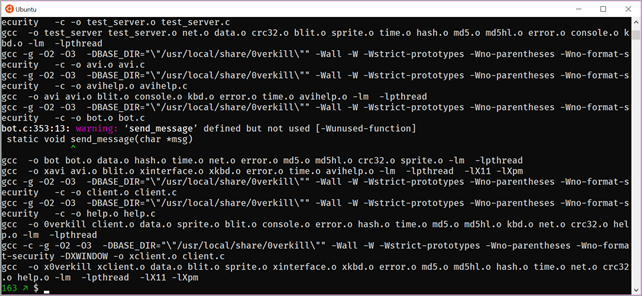

I need to crack open that rpm file and get the library. RPM packages are just headers on top of a CPIO archive, so I can apt-get install rpm2cpio on my local Ubuntu instance (on Windows 10). Then, from /mnt/c/users/scott/Downloads (where I downloaded the file), I extract it:

```
rpm2cpio ./libunwind-1.1-3.el6.x86_64.rpm | cpio -idmv
```

There they are.


This part is cool. Even though I have these files, I don't have root or any way to "install" them. However, I could either set the LD_LIBRARY_PATH environment variable to control where libraries get loaded from, OR I could put these files in $ORIGIN/netcoredeps. You can read more about Self Contained Linux Applications on .NET Core here.

> The main executable of published .NET Core applications (which is the .NET Core host) has an RPATH property set to $ORIGIN/netcoredeps. That means that when the Linux shared library loader is looking for shared libraries, it looks to this location before looking to default shared library locations. It is worth noting that the paths specified by the LD_LIBRARY_PATH environment variable or libraries specified by the LD_PRELOAD environment variable are still used before the RPATH property. So, in order to use local copies of the third-party libraries, developers need to create a directory named netcoredeps next to the main application executable and copy all the necessary dependencies into it.
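For the LD_LIBRARY_PATH route, here is a minimal sketch that runs one command with an extra library directory prepended, without clobbering whatever value is already set. The helper name `with_libdir` and the `$HOME/www/netcoredeps` path in the usage line are hypothetical; the claim that LD_LIBRARY_PATH wins over the host's netcoredeps path comes from the docs quoted above.

```shell
#!/bin/sh
# Hypothetical helper: run a command with an extra directory at the front of
# LD_LIBRARY_PATH. Per the .NET Core docs, LD_LIBRARY_PATH is consulted before
# the host's $ORIGIN/netcoredeps path.
with_libdir() {
  dir=$1
  shift
  # ${VAR:+:$VAR} appends ":old value" only when LD_LIBRARY_PATH is non-empty,
  # so we never leave an empty entry (which the loader treats as the cwd).
  LD_LIBRARY_PATH="$dir${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}" "$@"
}

# Usage on the host:
#   with_libdir "$HOME/www/netcoredeps" ./supercheapaspnetsite
```

The variable is scoped to that one command, which keeps the rest of the shell session (and any PHP tooling you poke at) unaffected.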

At this point I've added a "netcoredeps" folder to my public folder, and then copied it (scp) over to GoDaddy. Let's run it again.
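Staging that folder can be scripted. This is a sketch, assuming rpm2cpio/cpio unpacked the RPM's `usr/lib64` directory under the current folder (the `cpio -v` listing shows the real paths) and that you point it at the publish folder from earlier; `stage_netcoredeps` is a hypothetical name. It copies with `cp -L` so symlinked names like libunwind.so.8 become regular files, since some Windows FTP/SCP clients don't carry symlinks across.

```shell
#!/bin/sh
# Hypothetical helper: copy shared libraries extracted from an RPM into the
# netcoredeps folder that the .NET Core host searches next to the executable.
#   $1 = directory containing the extracted .so files (e.g. ./usr/lib64)
#   $2 = the app's publish folder
#   $3 = filename glob to copy (e.g. 'libunwind*.so*')
stage_netcoredeps() {
  src=$1 publish=$2 pattern=$3
  mkdir -p "$publish/netcoredeps" || return 1
  found=0
  for lib in "$src"/$pattern; do
    [ -e "$lib" ] || continue   # the glob matched nothing
    # -L dereferences symlinks (libunwind.so.8 -> libunwind.so.8.0.1) so each
    # name lands as a real file that survives an FTP/SCP upload.
    cp -L "$lib" "$publish/netcoredeps/" || return 1
    found=$((found + 1))
  done
  [ "$found" -gt 0 ]            # fail if nothing was copied
}

# Usage (paths assumed):
#   stage_netcoredeps ./usr/lib64 ./bin/Debug/netcoreapp2.1/rhel.6-x64/publish 'libunwind*.so*'
```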

```
secretname@theirvmname [~/www]$ ./supercheapaspnetsite
FailFast: Couldn't find a valid ICU package installed on the system. Set the configuration flag System.Globalization.Invariant to true if you want to run with no globalization support.
   at System.Environment.FailFast(System.String)
   at System.Globalization.GlobalizationMode.GetGlobalizationInvariantMode()
   at System.Globalization.GlobalizationMode..cctor()
   at System.Globalization.CultureData.CreateCultureWithInvariantData()
   at System.Globalization.CultureData.get_Invariant()
   at System.Globalization.CultureInfo..cctor()
   at System.StringComparer..cctor()
   at System.AppDomain.InitializeCompatibilityFlags()
   at System.AppDomain.Setup(System.Object)
Aborted
```

OK, now it's complaining about ICU packages. These are for globalization. That is also mentioned in the self-contained Linux apps docs, and there's a precompiled binary I could download. But there are options.

> If your app doesn't explicitly opt out of using globalization, you also need to add libicuuc.so.{version}, libicui18n.so.{version}, and libicudata.so.{version}.

I like opting out so I don't have to go dig these up (although I could), so I can either set the CORECLR_GLOBAL_INVARIANT env var to 1, or add System.Globalization.Invariant = true to supercheapaspnetsite.runtimeconfig.json, which I'll do just to be obnoxious. ;)
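For reference, here is roughly what that runtimeconfig.json change looks like, as a sketch. The `runtimeOptions`/`configProperties` nesting is where a runtimeconfig.json carries runtime switches; the helper name `write_invariant_runtimeconfig` is hypothetical, and because it overwrites the file, merge the single key by hand if your published runtimeconfig.json already lists other configProperties.

```shell
#!/bin/sh
# Hypothetical helper: write a runtimeconfig.json that turns on invariant
# globalization mode, so the runtime stops looking for ICU libraries.
# WARNING: this replaces the whole file; merge by hand if it holds other settings.
write_invariant_runtimeconfig() {
  cat > "$1" <<'EOF'
{
  "runtimeOptions": {
    "configProperties": {
      "System.Globalization.Invariant": true
    }
  }
}
EOF
}

# Usage, next to the published executable:
#   write_invariant_runtimeconfig ./supercheapaspnetsite.runtimeconfig.json
```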

When I run it again, I get another complaint, this time about libuv, yet another shared library that isn't installed on this instance. I could go get it and put it in netcoredeps, OR, since I'm using .NET Core 2.1, I could try something new. .NET Core 2.1 includes improvements around sockets and HTTP performance. On the client side, the new networking libraries are written from the ground up in managed code using the new high-performance Span<T>, and on the server side I can use Kestrel's (Kestrel is the .NET Core web server) experimental UseSockets(), as the team is starting to move Kestrel over to managed sockets.

In other words, I can bypass libuv entirely by changing my Program.cs to call UseSockets(), like this:

```csharp
public static IWebHostBuilder CreateWebHostBuilder(string[] args) =>
    WebHost.CreateDefaultBuilder(args)
        .UseSockets()
        .UseStartup<Startup>();
```

Let's run it again. I'll set the ASPNETCORE_URLS environment variable to listen on a high port like 8080. Remember, I'm not admin, so I can't use any port under 1024.

```
secretname@theirvmname [~/www]$ export ASPNETCORE_URLS="http://*:8080"
secretname@theirvmname [~/www]$ ./supercheapaspnetsite
Hosting environment: Production
Content root path: /home/secretname/public_html
Now listening on: http://0.0.0.0:8080
Application started. Press Ctrl+C to shut down.
```

Holy crap it actually started.

Ok, but I can't access it from supercheapaspnetsite.com:8080 because this is GoDaddy's locked down managed shared hosting. I can't just open a port or forward a port in their control panel.

But. They use Apache, and that has the .htaccess file!

Could I use mod_proxy and try this?

```
ProxyPassReverse / http://127.0.0.1:8080/
```

Looks like no, they haven't turned this on. Likely they don't want to proxy off to external domains, but it'd be nice if they allowed localhost. Bummer. So close.

Fine, I'll proxy the traffic myself. (Not perfect, but this is all a spike.) In .htaccess, I send every request to a PHP script:

```
RewriteEngine On
RewriteRule ^(.*)$ "show.php" [L]
```

Cool, now a cheesy proxy goes in show.php.

```php
<?php
// Forward the rewritten request to the ASP.NET Core app listening on localhost.
$site = 'http://127.0.0.1:8080';
$request = $_SERVER['REQUEST_URI'];

$ch = curl_init();
curl_setopt($ch, CURLOPT_URL, $site . $request);
curl_setopt($ch, CURLOPT_HEADER, TRUE);

$f = fopen("headers.txt", "a");
curl_setopt($ch, CURLOPT_VERBOSE, 0);
curl_setopt($ch, CURLOPT_STDERR, $f);

#don't output curl response, I need to strip the headers.
#yes I know I can just CURLOPT_HEADER, false and all this
# goes away, but for testing we log headers
curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
curl_setopt($ch, CURLOPT_FOLLOWLOCATION, true);

$hold = curl_exec($ch);

#strip headers
$header_size = curl_getinfo($ch, CURLINFO_HEADER_SIZE);
$headers = substr($hold, 0, $header_size);
$response = substr($hold, $header_size);
$headerArray = explode(PHP_EOL, $headers);

#log the upstream headers, then release the curl handle and the log file
fwrite($f, $headers);
curl_close($ch);
fclose($f);

echo $response; #echo ourselves. Yes I know curl can do this for us.
?>
```

Cheesy, yes. Works for GET? Also, yes. This really is Apache's job, not ours, but kudos to Tomas for this evil idea.

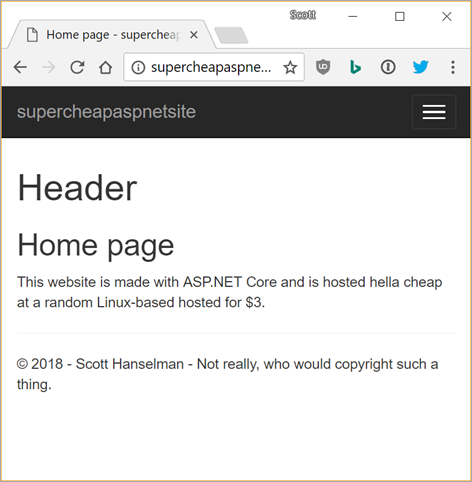

![An ASP.NET Core app at a host that doesn't support it]()

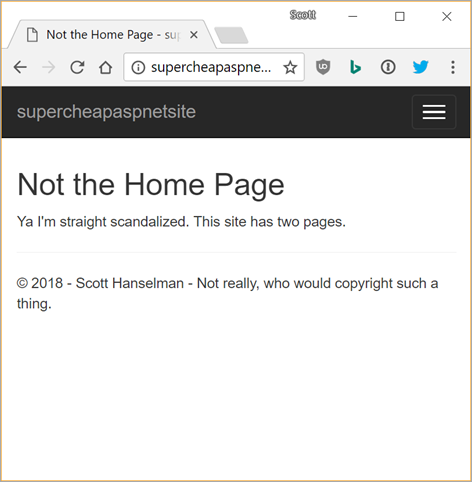

Boom. How about another page at /about? Yes.

![Another page with ASP.NET Core at a host that doesn't support it]()

Lovely. But I had to run the app myself. I have no supervisor or process manager (again, GoDaddy already handles this for PHP, but I'm in an unprivileged world). Shooshing in and running it by hand is a bad idea and not sustainable. (Well, this whole thing is not sustainable, but still.)

We could copy screen over, start the app with screen ./supercheapaspnetsite, and detach, but again, if it crashes, no one will restart it. We do have crontab, so for now we'll have cron run a health check on a schedule and start the app again if needed. I also added a few debugging tools in ~/bin:

```
secretname@theirvmname [~/bin]$ ll
total 304
drwxrwxr-x  2   4096 Feb 28 20:13 ./
drwx--x--x 20   4096 Mar  1 01:32 ../
-rwxr-xr-x  1 150776 Feb 28 20:10 lsof*
-rwxr-xr-x  1  21816 Feb 28 20:13 nc*
-rwxr-xr-x  1 123360 Feb 28 20:07 netstat*
```
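Here is a sketch of what that cron-driven check could look like. It assumes the copied nc supports `-z` (connect-only probing), that nohup exists on the host, and that the app lives in ~/www on port 8080; the script name keepalive.sh, the log file names, and all function names are hypothetical. It only checks that something accepts connections on the port, not that pages render. Because cron runs with a minimal environment, the script puts ~/bin on PATH (so nc is found) and sets ASPNETCORE_URLS itself.

```shell
#!/bin/sh
# Hypothetical keep-alive script for cron, saved as ~/bin/keepalive.sh.
PATH="$HOME/bin:$PATH"              # cron's PATH won't include ~/bin (for nc)
APP_DIR=${APP_DIR:-$HOME/www}
APP_NAME=${APP_NAME:-supercheapaspnetsite}
PORT=${PORT:-8080}

is_listening() {
  # Succeeds if something accepts TCP connections on 127.0.0.1:$PORT.
  nc -z 127.0.0.1 "$PORT" >/dev/null 2>&1
}

start_app() {
  cd "$APP_DIR" || return 1
  # Detach from cron with nohup and append the app's output to a log file.
  ASPNETCORE_URLS="http://*:$PORT" nohup "./$APP_NAME" >>"$APP_DIR/app.log" 2>&1 &
}

ensure_running() {
  if [ "$PORT" -lt 1024 ]; then
    echo "port $PORT needs root; pick 1024 or higher" >&2
    return 1
  fi
  if is_listening; then
    echo "already running"
  else
    start_app && echo "started"
  fi
}

# Example crontab entry (every 5 minutes):
#   */5 * * * * $HOME/bin/keepalive.sh >> $HOME/keepalive.log 2>&1
# Run the check only when executed as keepalive.sh, so the functions can also
# be sourced and tried by hand.
case "$0" in
  *keepalive.sh) ensure_running ;;
esac
```

Remember to chmod +x the script, just like the app itself.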

All in all, not that hard. ASP.NET Core and .NET Core underneath it can run pretty much anywhere, just like PHP, Python, whatever.

If you're a host and you want to talk to someone at Microsoft about setting up ASP.NET Core shared hosting, email Sourabh.Shirhatti@microsoft.com and talk to them! If you are GoDaddy, I apologize, and you should also email. ;)


© 2017 Scott Hanselman. All rights reserved.
