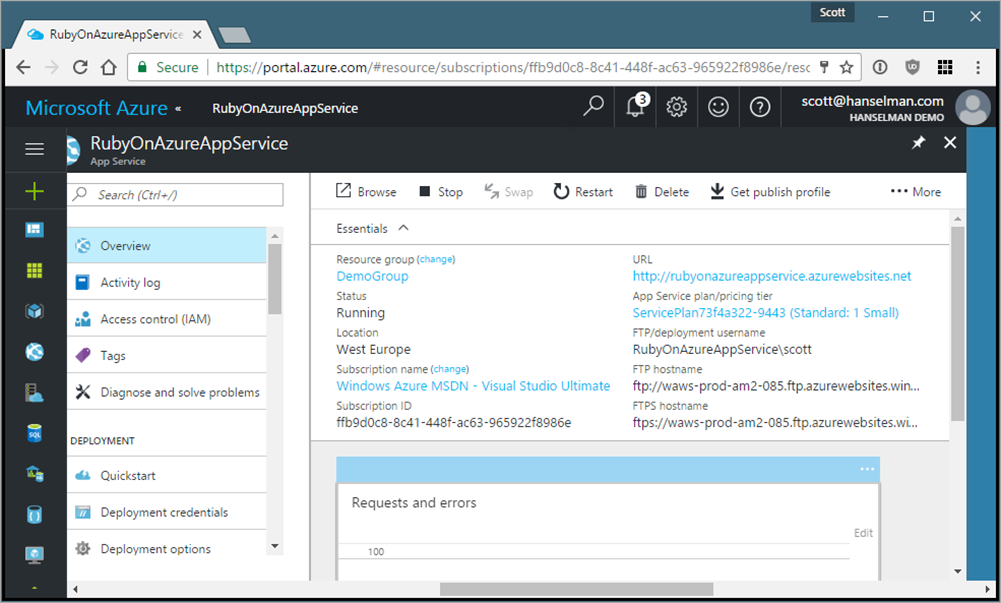

I just discovered that you can see a preview (almost like a daily build) of the Azure Portal if you go to https://preview.portal.azure.com instead of https://portal.azure.com. Sometimes the changes are big, sometimes they are subtle. It feels faster to me.

A few days ago I blogged that I had found a number of things in Azure that I wasn't previously aware of like "Metrics per instance (App Service)" which is DEEPLY useful if you run more than one Web App inside an App Service Plan. Remember, an App Service Plan is basically a VM and you can run as many Websites, docker containers, Azure Functions, Mobile Apps, Api Apps, Logic apps, and whatever you can fit in there. Density is the word of the day.

Azure App Service Secrets and Hidden Gems

A bunch of folks agreed that there were some real hidden gems worth exploring so I thought I'd take a moment and do just that. Here's a few of the things that I'm continuously amazed are included for free with App Service.

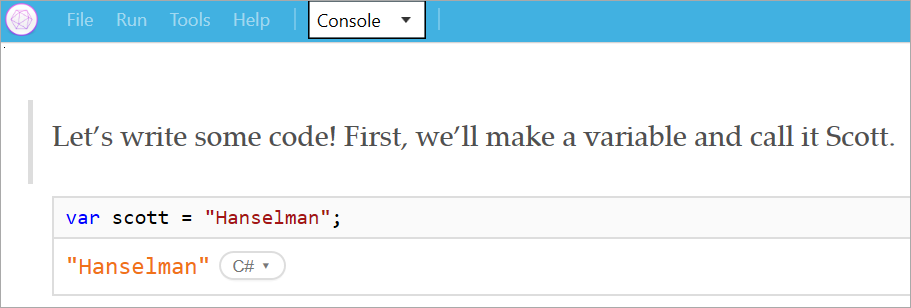

Console

There's a web-based console that you can access from the Azure Portal to explore your apps!

This is basically an HTML 5 bash prompt. I find it useful to double check the contents of certain files in Production, and confirm environment variables are set. I also, for some reason, find it comforting to see that my "cloud web site" actually lives on Drive D:. It calms me to know the Cloud has a D Drive.

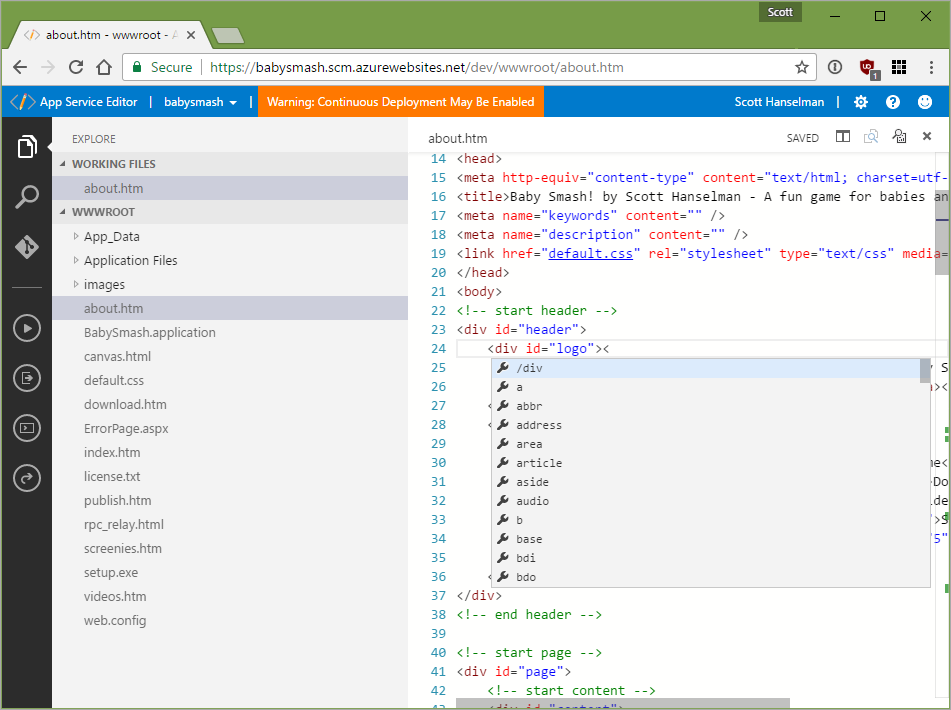

App Service Editor

App Service Editor is the editor that's codenamed "Monaco" that powers Visual Studio Code. It's amazing and few people know about it. I use it to make quick updates to production, although you do need to be aware if you have Continuous Deployment enabled that your changes will get eventually overwritten.

Testing in Production - (A/B Testing)

This is an amazing feature that not enough people know about. So, I'm assuming you are aware of Staging Slots? These are things like dev-, test-, or staging- that you can pull from a different branch during CI/CD, or just a separate but near-identical website that runs on the same hardware. The REAL magic is the Testing in Production feature.

Once you have a slot - I have one here for the Staging Site for BabySmash - you have the option to just "swap" between staging and production...OR...you can set a percentage of traffic you want to go to each slot!

Note that traffic is pinned to a slot for the life of a client session, so you don't have to worry about folks bouncing around if you change the UI or something.

Why is this insanely powerful? You can even make - for example - a "beta" slot and have your customers opt-in to a beta! And you don't have to write any code to enable this! MyApp.com/?x-ms-routing-name=beta would get them there and MyApp.com?x-ms-routing-name=self always points to Production.

You could also write a PowerShell script that would slowly move traffic in increments. That way you could ramp up traffic to staging from 5% to 100% - assuming you see no errors or issues.

$siteName = "yourProductionSiteName"

$rule1 = New-Object Microsoft.WindowsAzure.Commands.Utilities.Websites.Services.WebEntities.RampUpRule

$rule1.ActionHostName = "yourSlotSiteName"

$rule1.ReroutePercentage = 10;

$rule1.Name = "stage"

$rule1.ChangeIntervalInMinutes = 10;

$rule1.ChangeStep = 5;

$rule1.MinReroutePercentage = 5;

$rule1.MaxReroutePercentage = 50;

$rule1.ChangeDecisionCallbackUrl = "callBackUrlOfyourChoice-OptionalThatDecidesIfYouShoudlKeepGoing"

Set-AzureWebsite $siteName -Slot Production -RoutingRules $rule1

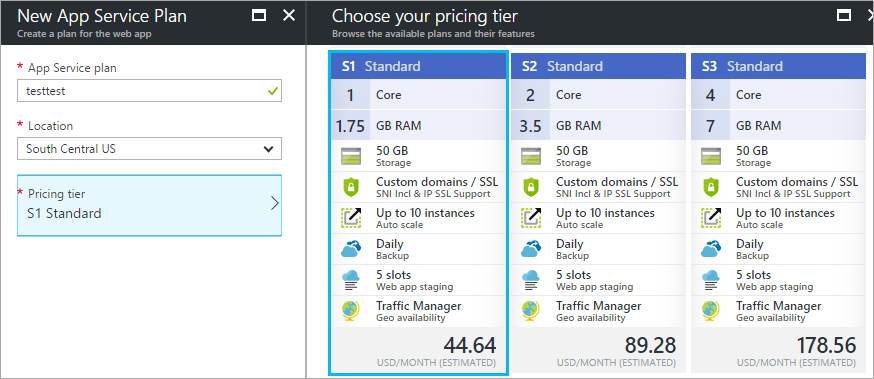

All this stuff is built-in to the Standard Azure AppServicePlan.

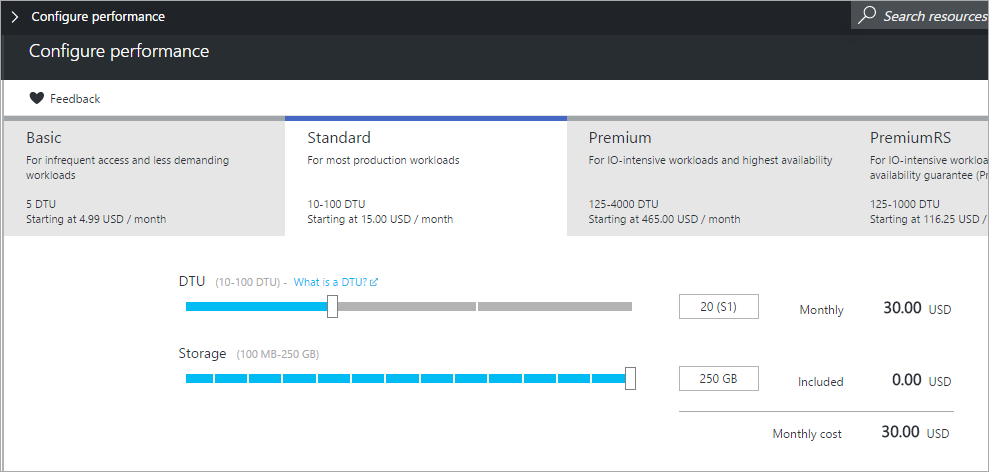

Easy and Cheap Databases

A number of folks in the comments of my last post asked about the 20 websites I have running on my single App Service Plan. Some felt I may have been disingenuous about the pricing and assumed I have a bunch of SQL Server databases behind my sites, or that a site can't be useful without a SQL Server.

There's a few things there to answer. My sites are many different techs, Node.js, Ruby, C# and ASP.NET MVC, and static sites. For example:

- Running the Ruby Middleman Static Site Generator on Microsoft Azure runs in the cloud when I check code into GitHub but deploys a static site.

- The Hanselminutes Podcast uses WebMatrix and ASP.NET WebPage's "SQL Compact Edition." This database runs out of a single file that's stored locally.

- One of my node.js sites uses SQL Lite for its data.

- One ASP.NET application uses "Azure MySQL in-app" that is also included in Azure App Service. You get a single modest MySQL database that runs in the context of your App Service. It's not super fast and meant for development, but with a little caching it's very workable.

- One node.js app thinks it is talking MongoDB but actually it's talking via MongoDB protocol support in Azure DocumentDB. You can create an Azure noSQL DocumentDB and point any app that speaks Mongo to it and it Just Works.

There's a number of options, including Easy Tables for your Mobile Apps. Check out http://mobile.azure.com to learn more about how you can get a VERY quick and easy backend for mobile (or web) apps.

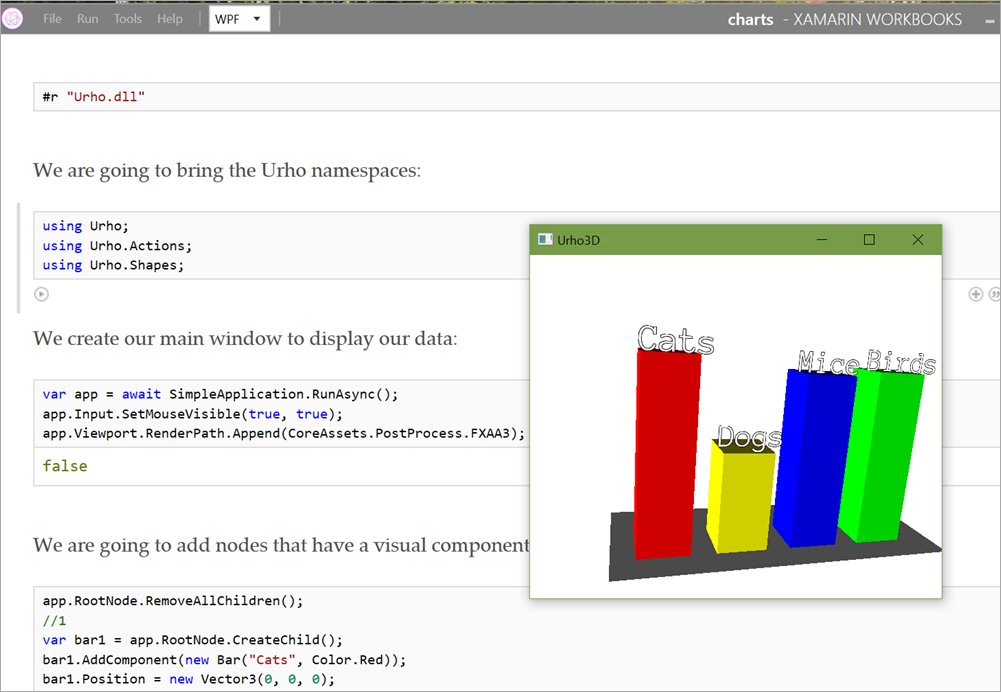

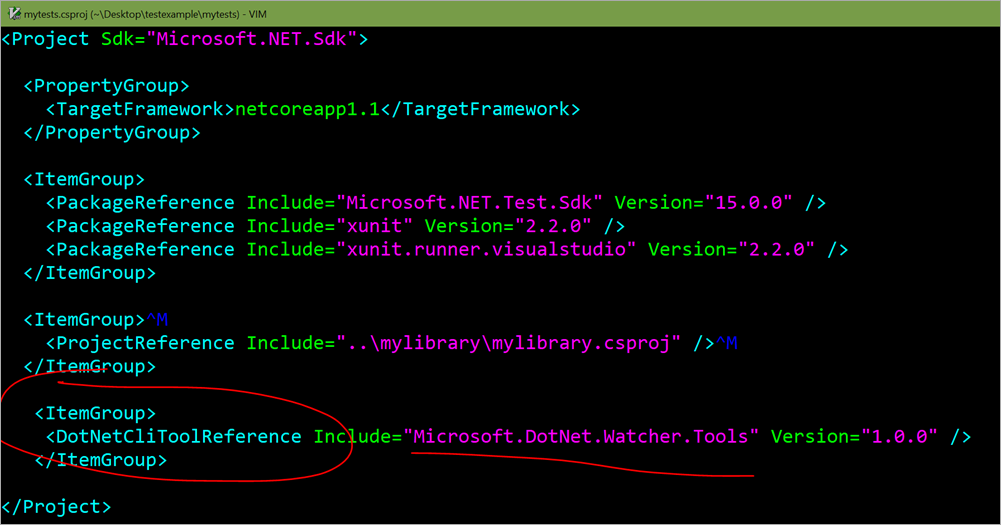

Azure App Service Extensions

If you have used Git deploy to an Azure App Service, you likely noticed a "Sidecar" website that your app has. I have babysmash.com which is actually babysmash.azurewebsites.net, right? There's also babysmash.scm.azurewebsites.net that you can't access. That sidecar site (when I'm authenticated) has a ton of easy REST GET APIs I can call to get my process list, files, deployments, and lots more. This is all powered by Kudu, which is open source by the way.

Kudu's sidecar site is a "site extension." You can not only write your own Azure Site Extension (they are just NuGet packages!) but it turns out there are a TON of useful already vetted and published extensions you can add to your site today. Those extensions live at http://www.siteextensions.net but you add them directly from the Azure Portal. There's 84 at the time of this blog post.

Azure Site Extensions include:

- phpMyAdmin - for Admin of MySQL over the web

- Azure Let's Encrypt - Easy install of Let's Encrypt SSL certs!

- Image Optimizer - Automatic squishing of your site's JPGs and PNGs because you know you forgot!

- GoLang Support - Azure doesn't officially support Go in Azure Web Apps...but with this extension it works fine!

- Jekyll - Easy static site generation in Azure

- Brotli HTTP Compression

You get the idea.

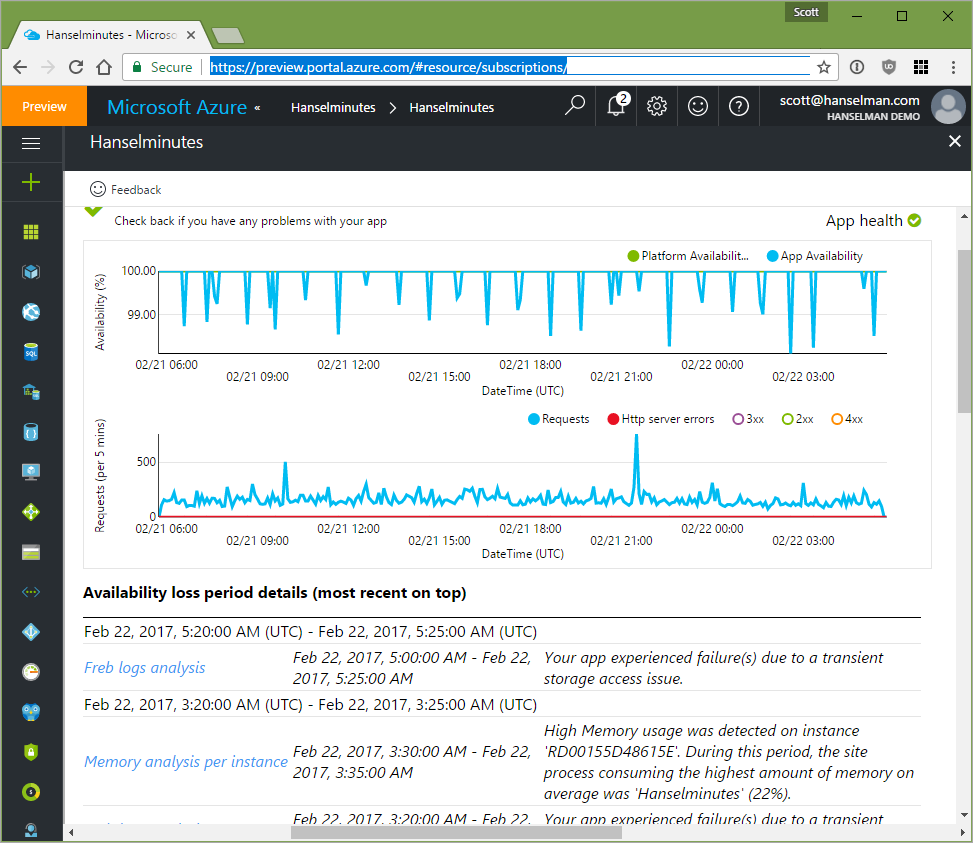

Diagnostics

I just discovered this "uptime" blade within my Web Apps in the Azure Portal. It tells me my app's uptime and if it's not 100%, it tells my why not and when!

Again, none of this stuff costs extra. You can add Site Extensions or explore your apps to the limit of the underlying App Service Plan. I'm doing all this on a single Standard 1 (S1) App Service Plan.

Sponsor: Excited about the future in ASP.NET? The folks at Progress held an awesome webinar which gives a 360⁰ view of the new ASP.NET Core and how it compares to WebForms and MVC. Watch it now on demand!

© 2016 Scott Hanselman. All rights reserved.

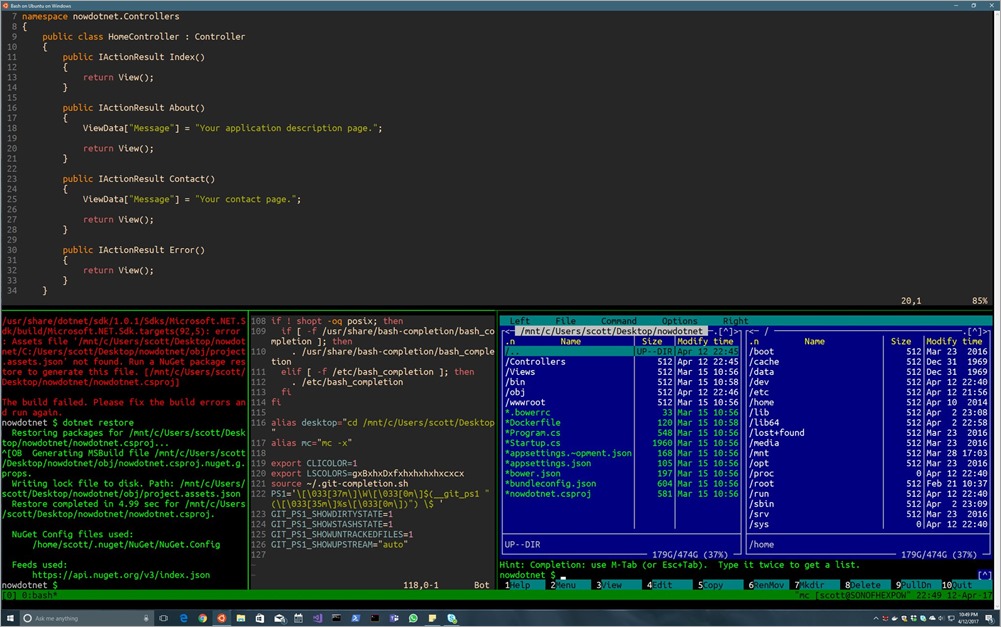

I'm a huge fan of the command line, and sometimes I feel like Windows people are

I'm a huge fan of the command line, and sometimes I feel like Windows people are

I'm setting a goal for myself to finish my half-finished book

I'm setting a goal for myself to finish my half-finished book

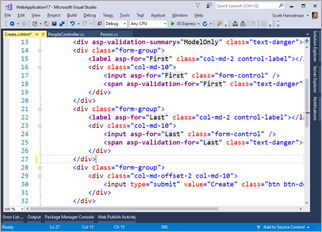

This little post is just a reminder that while Model Binding in ASP.NET is very cool, you should be aware of the properties (and semantics of those properties) that your object has, and whether or not your HTML form includes all your properties, or omits some.

This little post is just a reminder that while Model Binding in ASP.NET is very cool, you should be aware of the properties (and semantics of those properties) that your object has, and whether or not your HTML form includes all your properties, or omits some.